Reduced-Order Nonlinear Observer for Range Estimation

Abstract

A reduced-order nonlinear observer is developed to estimate the distance from a moving camera to a feature point on a static object (i.e., range identification), where full velocity and linear acceleration feedback of the calibrated camera is provided. The contribution of this work is a globally exponentially stable range observer that can be used for a larger set of camera motions than existing observers. The observer is shown to be robust against external disturbances even if the target object is moving or the camera motion is perturbed. The presented observer identifies the range provided that an observability condition commonly used in the literature is satisfied, and it is shown to be exponentially stable even if the camera motion satisfies a less restrictive observability condition. A sufficient condition on the observer gain is derived to prove stability using a Lyapunov-based analysis. Experimental results obtained with an autonomous underwater vehicle (AUV) are provided to show the robust performance of the observer.

Experimental Results

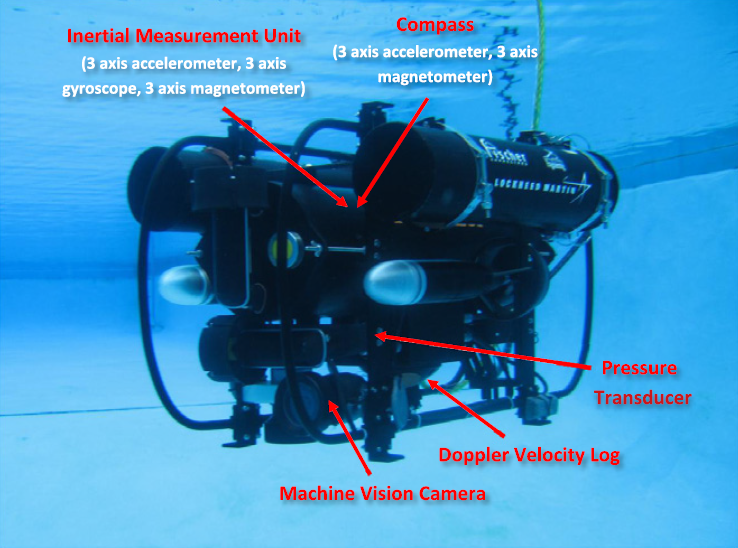

Experiments are conducted to estimate the range of a 9-in. mooring buoy floating in the middle of a water column, as observed by a camera rigidly attached to an autonomous underwater vehicle (AUV). Fig. 1a shows the AUV experimental platform. The AUV is equipped with a Matrix Vision mvBlueFox-120a color USB camera, a Doppler velocity log (DVL), a pressure transducer, a compass, and an inertial measurement unit (IMU). Two computers running Microsoft Windows Server 2008 are used on the AUV. One computer is dedicated to running image processing algorithms, and the other executes sensor data fusion, low-level component communication and control, and mission planning.

An unscented Kalman filter (UKF) fuses the IMU, DVL, and pressure transducer data at 100 Hz to accurately estimate the position, orientation, and velocity of the AUV with respect to an inertial frame by correcting the IMU bias. This position data is used to compare the results of the observer with a relative ground truth measurement, obtained by rotating the localized AUV position into the camera-fixed frame. The buoy is tracked in the 640 × 480 video image using a standard feature tracking algorithm, as shown in Fig. 1b, and pixel data of the centroid of the buoy is recorded at 15 Hz.
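The ground truth step, rotating an inertial-frame position into the camera-fixed frame, can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration and does not come from the experiment: the function names, the yaw-only rotation, the choice of a camera-to-inertial rotation matrix, and the example coordinates. The actual frame conventions and data used on the AUV are not given in the text.

```python
import math

def transpose(m):
    """Transpose a 3x3 matrix stored as a list of rows."""
    return [list(row) for row in zip(*m)]

def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def yaw_rotation(psi):
    """Hypothetical camera-to-inertial rotation: yaw of psi radians about z."""
    c, s = math.cos(psi), math.sin(psi)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]

def feature_in_camera_frame(r_cam_to_inertial, p_cam, p_feature):
    """Express the feature position relative to the camera, in camera axes.

    r_cam_to_inertial: rotation taking camera-frame vectors to inertial axes.
    p_cam, p_feature:  positions in the inertial frame.
    """
    relative = [f - c for f, c in zip(p_feature, p_cam)]
    # The inverse of a rotation matrix is its transpose.
    return mat_vec(transpose(r_cam_to_inertial), relative)

# Illustrative values only: camera at (1, 0, 0), feature at (1, 2, 0),
# camera yawed 90 degrees relative to the inertial frame.
rotation = yaw_rotation(math.pi / 2)
p_rel_cam = feature_in_camera_frame(rotation, [1.0, 0.0, 0.0], [1.0, 2.0, 0.0])
distance = math.sqrt(sum(x * x for x in p_rel_cam))
```

The Euclidean distance is unchanged by the rotation, so the rotation matters when a single camera-frame component (for example, depth along the optical axis) is taken as the range. Which component serves as the ground truth range depends on the camera axis convention, which this sketch does not assume.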

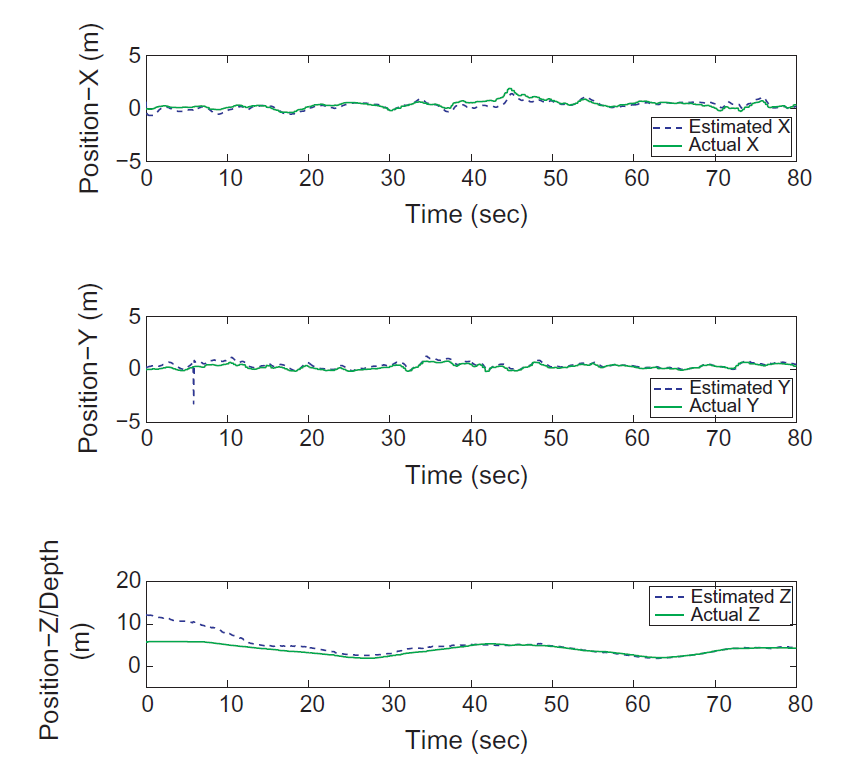

The linear and angular velocity and linear acceleration data obtained from the UKF are logged at the camera frame rate. Using the velocity, linear acceleration, and pixel data obtained from the AUV sensors, the range of the buoy is estimated with respect to the camera. The observer equations are integrated using a Runge-Kutta integrator with a time step of 1/15 s. A comparison of the estimated range with the ground truth measurement is shown in Fig. 2. Fig. 2 also shows that the feature tracking algorithm fails for several frames near time t = 6 s. The range estimation algorithm shows robust performance even in the presence of these feature tracking errors.
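A fixed-step integration at the camera frame rate can be sketched as follows. The text specifies only "a Runge-Kutta integrator" with a step of 1/15 s, so the classical fourth-order scheme used here is an assumption. The observer equations are not reproduced on this page, so `placeholder_dynamics` is a hypothetical stand-in (a simple exponential decay) and is not the observer itself.

```python
import math

def rk4_step(f, t, x, h):
    """Advance the state x by one classical fourth-order Runge-Kutta step."""
    k1 = f(t, x)
    k2 = f(t + h / 2, [xi + h / 2 * ki for xi, ki in zip(x, k1)])
    k3 = f(t + h / 2, [xi + h / 2 * ki for xi, ki in zip(x, k2)])
    k4 = f(t + h, [xi + h * ki for xi, ki in zip(x, k3)])
    return [xi + h / 6 * (a + 2 * b + 2 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]

def integrate(f, x0, t0, n_steps, h):
    """Integrate dx/dt = f(t, x) over n_steps fixed steps of size h."""
    t, x = t0, list(x0)
    for _ in range(n_steps):
        x = rk4_step(f, t, x, h)
        t += h
    return x

STEP = 1.0 / 15.0  # one step per camera frame at 15 Hz

def placeholder_dynamics(t, x):
    """Hypothetical stand-in for the observer equations: dx/dt = -x."""
    return [-x[0]]

# Integrate the placeholder system over one second (15 frames).
x_final = integrate(placeholder_dynamics, [1.0], 0.0, 15, STEP)
```

In an actual run, the right-hand side would be evaluated with the velocity, acceleration, and pixel measurements logged for each frame; how those measurements are held or interpolated between the intermediate Runge-Kutta stages is a design choice the text does not state.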

Publications

A. Dani, N. Fisher, Z. Kan, and W. E. Dixon, "Globally Exponentially Stable Observer for Vision-based Range Estimation," Mechatronics, Special Issue on Visual Servoing, Vol. 22, No. 4, pp. 381-389 (2012).
