Cooperative Visual Servo Control for Robotic Manipulators

Introduction

This project examined the performance of a recently developed cooperative visual servo controller that enables a robot manipulator to track objects moving in the task space along an unknown trajectory using visual feedback. The goal is to demonstrate that the cooperative use of a fixed camera and a camera-in-hand allows the manipulator to track such objects even though neither camera is calibrated.

Motivation

Typical DOE environmental management robotic systems require a highly experienced human operator to remotely guide and control every joint movement. The human operator moves a master manipulator, and the resulting feedback is used by the robotic controller.

To reduce the need for a human operator to control every movement of the robot, this project targets new methods that improve the robot's perception capabilities by using sensor information from a camera system to control the robot directly.

Methodology

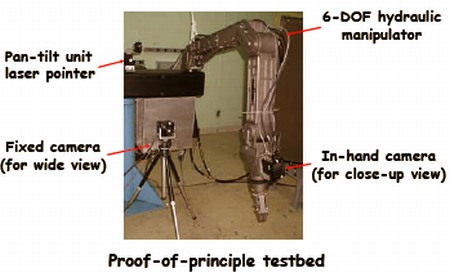

The project is motivated by the desire to automate robotic motion in unstructured environments for DOE decommissioning and decontamination operations. To integrate the current research into an existing DOE robotic system, the visual servo controller was implemented on a robotic manipulator testbed that has played a vital role in several DOE teleoperation-based applications. Because the existing testbed is controlled at the force/torque level by a closed (black box) system, the full robust torque controller could not be implemented without replacing that system entirely. Rather than replacing the existing controller, a plug-and-play retrofit was implemented.

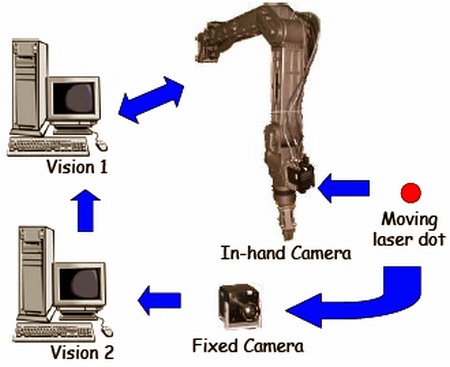

To facilitate communication between the computers, cameras, and the robot, a dedicated client/server architecture was developed. Uncalibrated information regarding the image-space position of the laser dot was obtained by the in-hand and fixed cameras. Vision 1 was responsible for determining the pixel information from the in-hand camera, and Vision 2 was responsible for determining the pixel information from the fixed camera. Vision 1 was also responsible for obtaining joint measurements from the robot, receiving pixel information from Vision 2, computing the desired robot trajectory, and delivering the computed desired joint positions to the robot controller.
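The data flow described above can be illustrated with a minimal sketch. It is not the project's actual software: the wire format (two little-endian doubles per pixel message), the function names `send_pixel`, `recv_pixel`, and `vision1_cycle`, and the placeholder `hold_position` law are all assumptions made for illustration. The real control law is not reproduced here, so it is passed in as a callback.

```python
import socket
import struct

# Hypothetical wire format: two little-endian doubles (u, v) in pixels.
PIXEL_FORMAT = "<2d"
PIXEL_SIZE = struct.calcsize(PIXEL_FORMAT)


def send_pixel(sock, u, v):
    """Vision 2 side: send one image-space laser-dot position."""
    sock.sendall(struct.pack(PIXEL_FORMAT, u, v))


def recv_pixel(sock):
    """Vision 1 side: block until one full pixel message has arrived."""
    buf = b""
    while len(buf) < PIXEL_SIZE:
        chunk = sock.recv(PIXEL_SIZE - len(buf))
        if not chunk:
            raise ConnectionError("Vision 2 closed the connection")
        buf += chunk
    return struct.unpack(PIXEL_FORMAT, buf)


def vision1_cycle(sock, in_hand_pixel, joint_angles, compute_desired_joints):
    """One Vision 1 cycle: combine the in-hand pixel, the fixed-camera pixel
    received from Vision 2, and the joint measurements, then return the
    desired joint positions to hand to the robot controller.

    `compute_desired_joints` stands in for the control law (an assumption).
    """
    fixed_pixel = recv_pixel(sock)
    return compute_desired_joints(in_hand_pixel, fixed_pixel, joint_angles)


def hold_position(in_hand_pixel, fixed_pixel, joint_angles):
    # Placeholder law: command the current joint angles unchanged.
    return list(joint_angles)


# Demo: an in-process socket pair stands in for the network link.
vision2_end, vision1_end = socket.socketpair()
send_pixel(vision2_end, 320.0, 240.0)
desired = vision1_cycle(vision1_end, (100.0, 50.0), [0.1, -0.2], hold_position)
vision2_end.close()
vision1_end.close()
```

Passing the control law as a callback keeps the communication layer separate from the trajectory computation, which mirrors the retrofit idea of leaving the existing robot controller untouched and supplying it only with desired joint positions.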

Results

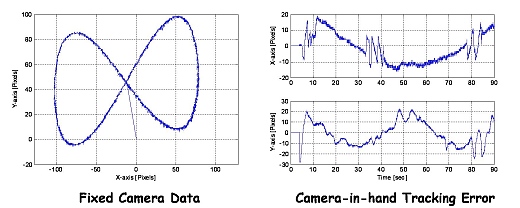

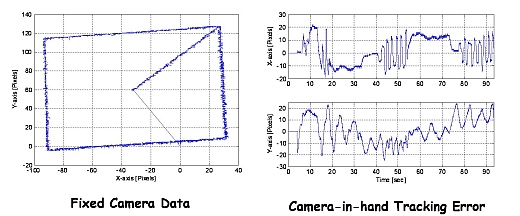

The performance of the cooperative visual servoing controller was experimentally demonstrated. Using feedback from the fixed and in-hand cameras, the manipulator was able to track a laser dot moving along an unknown trajectory.

Future Work

To overcome the restriction that the manipulator be confined to planar motion, current efforts are targeting image homography-based approaches to achieve six-degree-of-freedom object motion tracking. Subsequent efforts will also address other robotic systems such as mobile robots, which will present new challenges due to the differences in the camera motion and possible constraints imposed on the robot motion.