Vision-based localization of a wheeled mobile robot for greenhouse applications: A daisy-chaining approach

Introduction

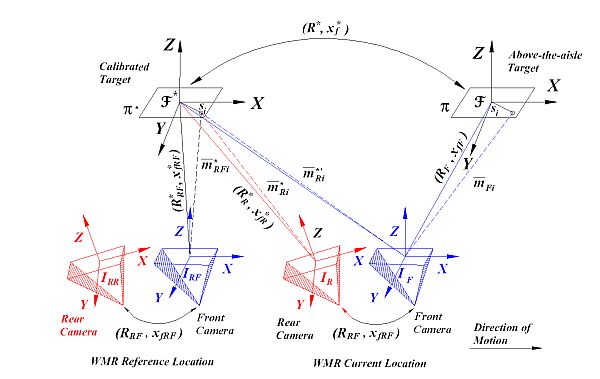

The Euclidean position and orientation of a wheeled mobile robot (WMR) are typically required for autonomous selection and control of agricultural operations in a greenhouse. This paper formulates a vision-based localization scheme that identifies the position and orientation of a WMR navigating in a greenhouse, using image feedback of above-the-aisle targets from an on-board camera. An innovative daisy-chaining strategy is used to find the local coordinates of the above-the-aisle targets in order to localize the WMR. In contrast to the camera configurations typically used for vision-based control problems, the localization scheme in this paper is developed using a moving on-board camera viewing receding targets. Multi-view photogrammetric methods are used to develop relationships between the different camera frames and the WMR coordinate systems. Experimental results demonstrate the feasibility of the developed geometric model.
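Relationships between camera frames of this kind are commonly built from a planar homography between views of coplanar feature points, such as the four corners of a rectangular target. As an illustration only (this summary does not state which estimator the authors used), the sketch below estimates a homography from exactly four correspondences with the standard direct linear transform (DLT), fixing h33 = 1 and solving the resulting 8×8 linear system. It is written in plain Python to stay self-contained; the function names and the example pixel coordinates are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(a: Matrix, b: List[float]) -> List[float]:
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate configuration (e.g. three collinear points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution on the upper-triangular system
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_4_points(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """Return 3x3 H (normalized so H[2][2] = 1) with dst ~ H * src in homogeneous coordinates."""
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("exactly four correspondences are required")
    a: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), and likewise for v.
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h: Matrix, p: Point) -> Point:
    """Map a 2D point through H and dehomogenize."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


if __name__ == "__main__":
    # Corners of a 300 mm x 150 mm target (plane coordinates, mm) and
    # hypothetical pixel locations of those corners in one image.
    corners_mm = [(0.0, 0.0), (300.0, 0.0), (300.0, 150.0), (0.0, 150.0)]
    corners_px = [(102.0, 88.0), (401.0, 95.0), (395.0, 240.0), (110.0, 231.0)]
    H = homography_from_4_points(corners_mm, corners_px)
    print(H)
```

With more than four points or noisy corner detections, one would instead normalize the coordinates and solve a least-squares version of the same system (for example via SVD).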

Camera coordinate frame relationships

Experimental Results

The experimental results illustrate the performance of the daisy-chaining-based WMR localization scheme. In the presented experiment, a forward-looking and a reverse-looking pinhole camera are mounted on a non-autonomous moving platform and view the overhanging targets. The hardware for the experimental testbed consists of three main components: (1) the forward- and reverse-looking camera configuration; (2) the overhanging targets; and (3) the image processing workstation. The cameras are KT&C KPCS20-CP1 fixed-focal-length color CCD cone pinhole cameras. Their analog NTSC output is digitized using universal serial bus (USB) frame grabbers. The overhanging targets are planar rectangular patches of 300 mm × 150 mm, placed 411 mm apart, and the feature points are taken to be the corners of each rectangle. The placement and size of the targets depend on the choice of camera. A camera with a large field of view requires larger targets for good positioning accuracy but fewer targets overall, whereas a camera with a longer focal length (i.e., a narrower field of view) requires smaller targets placed relatively close to each other; in either case, the total number of targets depends on the area and the number of aisles of the greenhouse. Colored rectangular targets were observed to be easy to identify with traditional image processing algorithms, and the required feature points can be selected at the corners of each rectangular target.
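To make the field-of-view trade-off concrete, the sketch below applies an ideal pinhole model to the 300 mm × 150 mm targets. The 640-pixel image width, the 400 px and 800 px focal lengths, the 1000 mm camera-to-target distance, and the fronto-parallel, on-axis target placement are all illustrative assumptions rather than values from the experiment; only the target size and the 411 mm spacing come from the text.

```python
import math
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def horizontal_fov_deg(image_width_px: float, focal_px: float) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(image_width_px / (2.0 * focal_px)))


def project(point_mm: Point3, focal_px: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection u = f X / Z + cx, v = f Y / Z + cy (camera frame, Z > 0)."""
    x, y, z = point_mm
    if z <= 0.0:
        raise ValueError("point must lie in front of the camera")
    return focal_px * x / z + cx, focal_px * y / z + cy


def target_corners(width_mm: float, height_mm: float, depth_mm: float) -> List[Point3]:
    """Corners of a fronto-parallel rectangle centred on the optical axis (simplifying assumption)."""
    hw, hh = width_mm / 2.0, height_mm / 2.0
    return [(-hw, -hh, depth_mm), (hw, -hh, depth_mm), (hw, hh, depth_mm), (-hw, hh, depth_mm)]


def target_footprint_px(focal_px: float, depth_mm: float,
                        width_mm: float = 300.0, height_mm: float = 150.0) -> Tuple[float, float]:
    """Width and height in pixels of the projected target (defaults: 300 mm x 150 mm patch)."""
    pts = [project(c, focal_px, 0.0, 0.0) for c in target_corners(width_mm, height_mm, depth_mm)]
    us = [u for u, _ in pts]
    vs = [v for _, v in pts]
    return max(us) - min(us), max(vs) - min(vs)


def visible_width_mm(image_width_px: float, focal_px: float, depth_mm: float) -> float:
    """Width of the target plane covered by the image at the given depth."""
    return depth_mm * image_width_px / focal_px


if __name__ == "__main__":
    # Hypothetical values: 640 px wide digitized image, targets 1000 mm from the camera.
    for f in (400.0, 800.0):
        w, h = target_footprint_px(f, 1000.0)
        span = visible_width_mm(640.0, f, 1000.0)
        print(f"f={f:.0f}px  FOV={horizontal_fov_deg(640.0, f):.1f} deg  "
              f"target={w:.0f}x{h:.0f}px  plane covered={span:.0f} mm "
              f"(~{span / 411.0:.1f} spacings of 411 mm)")
```

Doubling the focal length halves tan(FOV/2) and doubles the target's pixel footprint. Under these assumptions, a wide-angle camera therefore needs larger targets to keep corner localization accurate, while a narrow one covers less of the target plane and needs targets spaced more closely.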

The third component is the image processing workstation, which is used for image processing and vision-based state estimation. It is a PC running Microsoft Windows XP with a 3.06 GHz Intel Pentium 4 processor and 768 MB of RAM. For the presented experiment, image processing and state estimation are performed offline (not in real time) in MATLAB.

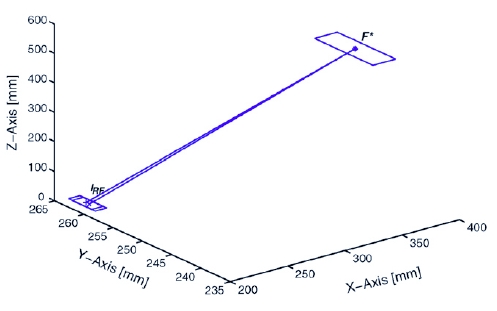

Case I: Calibrated target F* is in the field of view of the forward-looking camera frame IRF

Fig. 1 shows the actual and the experimentally identified position and orientation of the WMR while the forward-looking camera IRF views the calibrated target F*.

Fig. 1: Euclidean plot of the identified pose of the WMR (denoted by +) and the actual pose of the WMR IRF (denoted by x) while the forward-looking camera views the target F* (denoted by *).

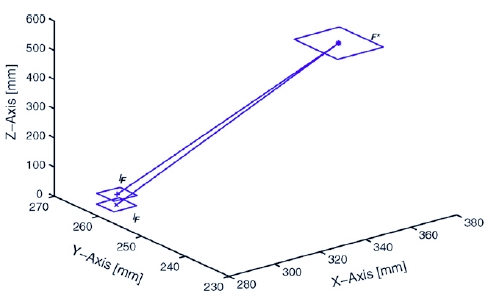

Case II: Calibrated target F* is in the field of view of the forward-looking camera frame IF

Fig. 2 shows the actual and the experimentally identified position and orientation of the WMR while the forward-looking camera IF views the calibrated target F*.

Fig. 2: Euclidean plot of the identified pose of the WMR (denoted by +) and the actual pose of the WMR IF (denoted by x) while the forward-looking camera views the target F* (denoted by *).

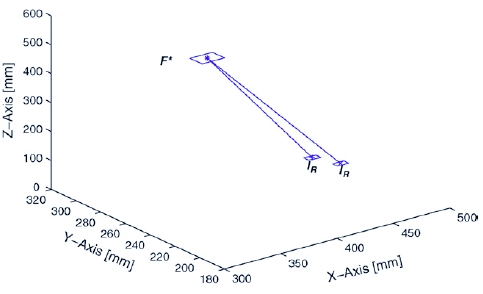

Case III: Calibrated target F* is in the field of view of the reverse-looking camera frame IR

Fig. 3 shows the actual and the experimentally identified position and orientation of the WMR while the reverse-looking camera IR views the calibrated target F*.

Fig. 3: Euclidean plot of the identified pose of the WMR IR (denoted by +) and the actual pose of the WMR IR (denoted by x) while the reverse-looking camera views the target F* (denoted by *).

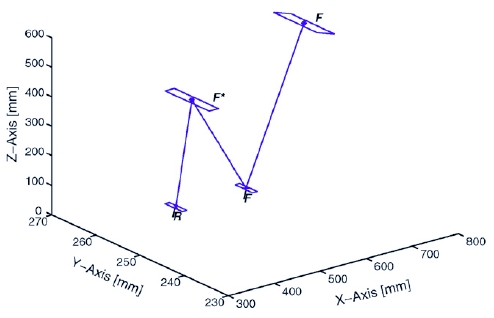

Case IV: Calibrated target F* is in the field of view of the reverse-looking camera frame IR and the above-the-aisle target F is in the field of view of the forward-looking camera frame IF

Fig. 4 shows the experimentally identified position and orientation of the above-the-aisle target F while the forward-looking camera IF views the target F and the reverse-looking camera IR views the calibrated target F*.

Fig. 4: Euclidean plot of the identified position and orientation of the above-the-aisle target F (denoted by *) while the forward-looking camera IF (denoted by +) views the target F and the reverse-looking camera IR views the calibrated target F* (denoted by x).
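Case IV is where the daisy chaining takes place: the pose of the new target F is inferred by chaining transformations through the robot-mounted camera pair. The sketch below shows only the bookkeeping of that chain with 4×4 homogeneous transforms, assuming that (a) the inertial pose of the calibrated target F* is known, (b) each camera's pose relative to the target it sees has already been estimated (for example, from a homography decomposition), and (c) the fixed transform between the reverse- and forward-looking cameras is known from calibration. The function and variable names are hypothetical, `T_a_b` maps coordinates in frame b into frame a, rotations are restricted to one axis only to keep the example short, and measurement noise is ignored.

```python
import math
from typing import List, Sequence

Mat4 = List[List[float]]


def make_pose(yaw_rad: float, t: Sequence[float]) -> Mat4:
    """Homogeneous transform with a rotation about z (for brevity) and a translation t."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [[c, -s, 0.0, t[0]],
            [s, c, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]],
            [0.0, 0.0, 0.0, 1.0]]


def compose(a: Mat4, b: Mat4) -> Mat4:
    """Matrix product a * b, i.e. T_x_z = T_x_y * T_y_z."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert(t: Mat4) -> Mat4:
    """Inverse of a rigid transform: [R | p]^-1 = [R^T | -R^T p]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    q = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [q[0]], rt[1] + [q[1]], rt[2] + [q[2]], [0.0, 0.0, 0.0, 1.0]]


def locate_new_target(T_w_Fstar: Mat4, T_IR_Fstar: Mat4, T_IR_IF: Mat4, T_IF_F: Mat4) -> Mat4:
    """Daisy chain: T_w_F = T_w_F* * (T_IR_F*)^-1 * T_IR_IF * T_IF_F."""
    T_w_IR = compose(T_w_Fstar, invert(T_IR_Fstar))  # reverse camera in the inertial frame
    T_w_IF = compose(T_w_IR, T_IR_IF)                 # forward camera in the inertial frame
    return compose(T_w_IF, T_IF_F)                    # new target F in the inertial frame
```

Once F has been located this way, it can act as the known reference for localizing the WMR further along the aisle, in line with the calibration of unknown targets described in the conclusion.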

Conclusion

In this paper, the position and orientation of a WMR are identified with respect to the greenhouse inertial frame of reference using a vision-based state estimation strategy. To achieve this result, multiple views of reference objects were used to develop Euclidean homographies. By decomposing the Euclidean homographies into separate translation and rotation components, reconstructed Euclidean information was obtained and used to calibrate unknown targets for localization of the WMR. The contribution of this paper is a new framework for identifying the pose of a moving WMR from images acquired by a moving camera, which would be beneficial for spatial selection and autonomous operations in a greenhouse. Experimental results verify the daisy-chaining-based localization scheme presented in this paper.
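The homography step can be illustrated with the Euclidean homography relation that is standard in multi-view geometry (assumed here, since this summary does not reproduce the paper's equations). For feature points on a plane with unit normal n* at distance d* in the reference camera frame, a point P* maps to P = R P* + t in the current frame; because n*^T P* = d*, this can be written P = (R + t n*^T / d*) P* = H P*. In normalized image coordinates m = P / z, it becomes m = (z* / z) H m*. The sketch builds H from an assumed R, t, n*, and d* and checks that relation. Decomposing a measured H back into R, t / d*, and n* is the inverse problem; it generally yields several candidate solutions that must be disambiguated, so it is not attempted here.

```python
import math
from typing import List, Sequence

Vec3 = List[float]
Mat3 = List[List[float]]


def mat_mul(a: Mat3, b: Mat3) -> Mat3:
    """3x3 matrix product a * b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(m: Mat3, v: Sequence[float]) -> Vec3:
    """3x3 matrix times 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def rot_x(a: float) -> Mat3:
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_z(a: float) -> Mat3:
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def euclidean_homography(R: Mat3, t: Sequence[float], n: Sequence[float], d: float) -> Mat3:
    """H = R + (t / d) n^T for the plane n^T P* = d expressed in the reference frame."""
    return [[R[i][j] + t[i] * n[j] / d for j in range(3)] for i in range(3)]


def normalize(p: Sequence[float]) -> Vec3:
    """Normalized image coordinates m = P / z."""
    return [p[0] / p[2], p[1] / p[2], 1.0]


if __name__ == "__main__":
    # Hypothetical motion and plane parameters, for illustration only.
    R = mat_mul(rot_z(0.2), rot_x(-0.1))
    t = [0.3, -0.1, 0.05]
    raw = [0.1, -0.2, 1.0]
    norm = math.sqrt(sum(c * c for c in raw))
    n = [c / norm for c in raw]
    d = 1.5
    H = euclidean_homography(R, t, n, d)
    x, y = 0.1, 0.2
    P_star = [x, y, (d - n[0] * x - n[1] * y) / n[2]]  # a point on the plane
    P = [a + b for a, b in zip(mat_vec(R, P_star), t)]
    Hm = mat_vec(H, normalize(P_star))
    # (z*/z) H m* should reproduce m; the residual is at round-off level.
    print([P_star[2] / P[2] * h - m for h, m in zip(Hm, normalize(P))])
```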

Publications

S.S. Mehta, T.F. Burks, W.E. Dixon, "Vision-based localization of a wheeled mobile robot for greenhouse applications: A daisy-chaining approach," Transactions on IFAC Computers and Electronics in Agriculture, 2007, accepted.