| Image-based State Estimation |

Imaging systems have become a ubiquitous sensor for autonomous systems. An image captured from a camera (or video stream) provides a dense data set that can be interpreted to understand relative position and orientation information about objects in the field-of-view (FOV). However, an image only provides two-dimensional information, and state estimation is required to recover the unmeasured distance from the camera. A challenge to the state estimation problem is that the image dynamics are inherently nonlinear (and nonlinear in the unmeasured state) and can be uncertain (e.g., uncertain camera calibration). NCR research efforts focus on the use of nonlinear observer methods and image geometry methods to solve problems such as Structure-from-Motion (SfM), Structure-and-Motion (SaM), range identification, Simultaneous Localization and Mapping (SLAM), and visual odometry. Recent and ongoing efforts focus on solving the above problems even during intermittent image feedback, when features in the scene are temporarily occluded. |
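The loss of depth described above can be illustrated with a minimal sketch. It assumes an ideal pinhole camera with unit focal length; the function name `project` and the sample points are hypothetical and chosen only for illustration. Two points at different distances along the same ray produce the same image coordinates, which is why the distance has to be estimated rather than read off a single image.

```python
def project(point, focal_length=1.0):
    """Ideal pinhole projection of a 3D point (X, Y, Z), expressed in the
    camera frame, onto the image plane. The unit focal length is an assumption."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    return (focal_length * X / Z, focal_length * Y / Z)


# Two hypothetical points on the same ray through the camera origin,
# one at depth 2 and one at depth 10.
near = (0.4, -0.2, 2.0)
far = (2.0, -1.0, 10.0)

u_near = project(near)
u_far = project(far)

# Both points land on the same image coordinates, so a single image
# cannot distinguish them.
print(u_near, u_far)
```

Because the depth `Z` appears in the denominator, the image coordinates are a nonlinear function of the unmeasured state, consistent with the challenge noted above.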

Visual Servo Control |

Unique control challenges exist when attempting to use image feedback in a closed-loop control system. For example, the image coordinates can be used directly as feedback (so-called image-based visual servo control, or IBVS), which heuristically gives the features a better chance of remaining in the field-of-view (FOV) since they are being regulated towards the center of the image. However, satisfying the desired feature trajectories can require large camera movements that are not physically realizable. Furthermore, the control laws can suffer from unpredictable singularities in the image Jacobian. Alternatively, the image coordinates can be related to a Euclidean coordinate system (so-called position-based visual servo control, or PBVS). Using the relative Euclidean coordinates typically yields physically valid camera trajectories, with known (and hence avoidable) singularities and no local minima. However, there is no explicit control of the image features, so features may leave the FOV, resulting in task failure. NCR efforts focus on homography-based (or 2.5D) approaches that combine the strengths of IBVS and PBVS to yield realizable control trajectories that also use image coordinates in the feedback. Such approaches have been used to develop image-based controllers that track desired image trajectories for various autonomous systems. A key innovation was the development of daisy-chaining as a means to relate current image features to a keyframe for visual odometry. Research efforts also focus on ensuring that features remain in the FOV during task execution, providing robustness when they leave the FOV, and planning paths based on FOV constraints. |
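To make the image Jacobian mentioned above more concrete, the following sketch uses the standard textbook interaction matrix for a single point feature in normalized image coordinates. It is not taken from the NCR controllers; the function names, the camera velocity ordering `(vx, vy, vz, wx, wy, wz)`, and the numerical values are assumptions for illustration only.

```python
def interaction_matrix(x, y, Z):
    """Textbook 2x6 image Jacobian of a point feature at normalized image
    coordinates (x, y) and depth Z. Columns follow the assumed camera velocity
    ordering (vx, vy, vz, wx, wy, wz)."""
    return [
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ]


def feature_velocity(x, y, Z, camera_velocity):
    """Image-plane velocity of the feature induced by a camera velocity."""
    L = interaction_matrix(x, y, Z)
    return tuple(sum(l * v for l, v in zip(row, camera_velocity)) for row in L)


# Hypothetical camera motions: pure translation along the camera x-axis,
# and pure rotation about the optical axis.
translation = (0.1, 0.0, 0.0, 0.0, 0.0, 0.0)
rotation = (0.0, 0.0, 0.0, 0.0, 0.0, 0.1)

# The same image point observed at two different (unmeasured) depths.
near_t = feature_velocity(0.2, -0.1, 2.0, translation)
far_t = feature_velocity(0.2, -0.1, 10.0, translation)
near_r = feature_velocity(0.2, -0.1, 2.0, rotation)
far_r = feature_velocity(0.2, -0.1, 10.0, rotation)

print(near_t, far_t)  # translation-induced motion shrinks as depth grows
print(near_r, far_r)  # rotation-induced motion does not depend on depth
```

In this model the translational columns scale with `1/Z`, so a controller that regulates image coordinates directly needs the depth or an estimate of it, while the rotational columns depend only on the image coordinates.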

Geolocation |

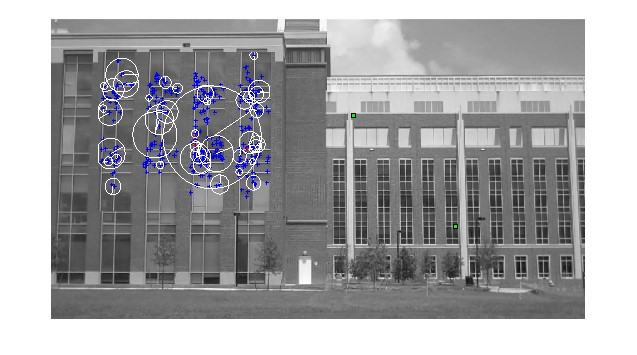

The focus of this project is to develop methods that can be used to determine the geographic location from which an arbitrary hand-held video was taken. Unlike image-matching-based approaches, which are ill-suited for application with dramatically different image perspectives, NCR research efforts focus on methods that can extract relative feature point coordinates from the underlying nonlinear image dynamics inherent to any video, reconstruct the scene geometry, and then compare with geometry available from overhead imagery to obtain the geolocation. This approach converts the camera into a Cartesian sensor that generates a three-dimensional geometric map of the scene that can be compared to other geometric maps derived from nadir images or other auxiliary information. The proposed approach includes: a feature point extraction, refinement, and position/orientation estimation method; nonlinear observer methods to determine the camera motion and structure in the scene; nonlinear optimization methods used for error correction and structure estimation refinement; and the use of image features to reduce possible geolocation matches within a region of interest. |
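The idea of comparing reconstructed geometry against geometry from overhead imagery can be sketched with a simple stand-in technique. The example below is not the project's method: it is a hypothetical screening heuristic that compares two planar point sets through their sorted, scale-normalized pairwise distances, which are unchanged by rotation, translation, and uniform scaling. All names and coordinates are illustrative assumptions.

```python
import math
from itertools import combinations


def shape_signature(points):
    """Sorted pairwise distances divided by the largest one, so the result
    is invariant to rotation, translation, and uniform scale."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    dists = sorted(math.dist(p, q) for p, q in combinations(points, 2))
    largest = dists[-1]
    if largest == 0:
        raise ValueError("points must not all coincide")
    return [d / largest for d in dists]


def signature_distance(points_a, points_b):
    """Largest gap between the two signatures; small values mean the point
    sets are candidates for a match (a necessary check, not a proof)."""
    sig_a, sig_b = shape_signature(points_a), shape_signature(points_b)
    if len(sig_a) != len(sig_b):
        raise ValueError("point sets must have the same size")
    return max(abs(a - b) for a, b in zip(sig_a, sig_b))


# Hypothetical footprint reconstructed from video (arbitrary scale and frame).
reconstructed = [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0), (0.0, 2.0)]
# Candidate from overhead imagery: the same rectangle rotated 90 degrees,
# scaled by 3, and shifted.
candidate_match = [(10.0, 5.0), (10.0, 8.0), (4.0, 8.0), (4.0, 5.0)]
# A different footprint (a square).
candidate_other = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]

match_score = signature_distance(reconstructed, candidate_match)
other_score = signature_distance(reconstructed, candidate_other)
print(match_score, other_score)
```

A check like this could only narrow down candidates within a region of interest; confirming a match would still require aligning the full geometry.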

Sponsors |

| Ongoing Projects

- AFOSR: Center of Excellence in Assured Autonomy in Contested Environments
- AFOSR: A Switched Systems Approach for Navigation and Control with Intermittent Feedback
- AFRL: Privileged Sensing Framework

Completed Projects

- Prioria: Observer Methods for Image Based Autonomous Navigation
- Florida High Corridor Matching Funds
- Laser Technology: A Nonlinear Approach to Image-Based Speed Estimation
- BARD (US Israel AG R&D Fund): Enhancement of Sensing Technologies for Selective Tree Fruit Identification and Targeting in Robotic Harvesting Systems
- DOE: University Research Program In Robotics For Environmental Restoration & Waste Management
- DARPA: Symbiosis of Micro-Robots for Advanced In-Space Operations
- Innovative Automation Technologies Inc: Micro Air Vehicle Tether Recovery Apparatus (MAVTRAP): Image-based MAV Capture and Tensegrity System
- NGA: Nonlinear Estimation Methods for Geolocation of Hand-held Video
- AFRL: Vision-Based Guidance and Control Algorithms Research
- UCF: Image-Based Motion Estimation and Tracking for Collaborative Space Assets
- AFRL: Simultaneous Localization and Mapping
- Eclipse Energy Systems: Eclipse Energy Systems Camera Project
- Prioria: Structure, Motion, and Geolocation Estimation for Autonomous Vehicles
- NGA: Nonlinear Observer and Learning Methods for Geolocation: A GEOINT Visual Analytics Tool
- DOA Natl Inst of Food & AG: NRI: An Integrated Machine Vision-based Control for Citrus Fruit Harvesting Using Enhanced 3D Mapping, Path Planning and Servo Control
- Florida High Corridor Matching Funds |

Related NCR Image Feedback Publications |

@InProceedings{Aiken2006,

Title = {Lyapunov-Based Range Identification for a Paracatadioptric System},

Author = {D. Aiken and S. Gupta and G. Hu and W. E. Dixon},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2006},

Address = {San Diego, California},

Month = {Dec.},

Pages = {3879-3884},

Keywords = {vision estimation, Guoqiang},

Timestamp = {2016.06.02},

Url1 = {http://ncr.mae.ufl.edu/papers/TAC08.pdf}

}

@InProceedings{Allen2019,

Title = {Cadence Tracking For Switched {FES}-Cycling With Unknown Time-Varying Input Delay},

Author = {B. Allen and C. Cousin and C. Rouse and W. E. Dixon},

Booktitle = {Proc. ASME Dyn. Syst. Control Conf.},

Year = {2019},

Month = {October},

Keywords = {FES, NMES, BrendonAllenPub}

}

@InProceedings{Allen.Cousin.ea2019,

author = {B. C. Allen and C. Cousin and C. Rouse and W. E. Dixon},

booktitle = {Proc. IEEE Conf. Decis. Control},

title = {Cadence Tracking for Switched {FES} Cycling with Unknown Input Delay},

year = {2019},

address = {Nice, France},

month = {Dec.},

pages = {60-65},

keywords = {robotpub, NMES, FES, delay, BrendonAllenPub},

owner = {wdixon},

}

@InProceedings{Allen.Stubbs.ea2020,

Title = {Robust Cadence Tracking for Switched {FES}-Cycling with an Unknown Time-Varying Input Delay Using a Time-Varying Estimate},

Author = {B. C. Allen and K. Stubbs and W. E. Dixon},

Booktitle = {IFAC World Congr.},

Year = {2020},

Keywords = {NMES, Robotpub, BrendonAllenPub}

}

@InProceedings{Andrews.Klotz.ea2014,

author = {L. Andrews and J. Klotz and R. Kamalapurkar and W. E. Dixon},

booktitle = {Proc. IEEE Conf. Decis. Control},

title = {Adaptive dynamic programming for terminally constrained finite-horizon optimal control problems},

year = {2014},

pages = {5095--5100},

keywords = {Optimal, NN, theory, learning, aeropub},

owner = {wdixon},

timestamp = {2017.05.08},

}

@InProceedings{Anubi.Crane.ea2012,

author = {O. Anubi and C. Crane and W. E. Dixon},

booktitle = {Proc. ASME Dyn. Syst. Control Conf.},

title = {Nonlinear Disturbance Rejection For Semi-active MacPherson Suspension System},

year = {2012},

address = {Fort Lauderdale, Florida},

month = {Oct.},

keywords = {Optimal, NN, theory, learning},

owner = {wdixon},

timestamp = {2017.05.08},

}

@InProceedings{Barnette2008,

author = {G. Barnette and J. Shea and W. E. Dixon},

booktitle = {Mil. Commun. Conf.},

title = {Sensing and Control in a Bandwidth-Limited Systems: A Kalman Filter Approach},

year = {2008},

address = {San Diego, CA},

month = {Jan.},

keywords = {networkpub, Optimal, theory},

timestamp = {2017.05.08},

}

@InProceedings{Barnette2010,

author = {G. L. Barnette and J.M. Shea and W. E. Dixon},

booktitle = {Mil. Commun. Conf.},

title = {Using Kalman Innovations for Transmission Control of Location Updates in a Wireless Network},

year = {2010},

address = {San Jose, CA},

pages = {2197-2202},

keywords = {networkpub, Optimal, theory},

}

@Article{Behal2002,

author = {A. Behal and D. M. Dawson and W. E. Dixon and Y. Fang},

journal = {IEEE Trans. Autom. Control},

title = {Tracking and Regulation Control of an Underactuated Surface Vessel with Nonintegrable Dynamics},

year = {2002},

pages = {495-500},

volume = {47},

keywords = {robotpub, aeropub},

timestamp = {2017.05.08},

url1 = {http://ncr.mae.ufl.edu/papers/tac02.pdf},

}

@InProceedings{Behal2000,

Title = {Tracking and Regulation Control of an Underactuated Surface Vessel with Nonintegrable Dynamics},

Author = {A. Behal and W.E. Dixon and D. M. Dawson and Y. Fang},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2000},

Address = {Sydney, Australia},

Month = {Dec.},

Pages = {2150-2155},

Keywords = {robotpub, aeropub},

Timestamp = {2017.05.08}

}

@Book{Behal2009,

author = {A. Behal and W. E. Dixon and B. Xian and D. M. Dawson},

publisher = {Taylor and Francis},

title = {Lyapunov-Based Control of Robotic Systems},

year = {2009},

isbn = {0849370256},

keywords = {Robotpub, theory},

timestamp = {2017.05.08},

}

@Article{Behal2005,

Title = {Adaptive Position and Orientation Regulation for the Camera-in-Hand Problem},

Author = {A. Behal and P. Setlur and W. E. Dixon and D. M. Dawson},

Journal = {J. Robotic Syst.},

Year = {2005},

Pages = {457-473},

Volume = {22},

Keywords = {robotpub, vision estimation},

Url = {http://ncr.mae.ufl.edu/papers/jrs05.pdf},

Url1 = {http://ncr.mae.ufl.edu/papers/jrs05.pdf}

}

@PhdThesis{Bell2019,

Title = {Target Tracking In Unknown Environments Using a Monocular Camera Subject To Intermittent Sensing},

Author = {Z. Bell},

School = {University of Florida},

Year = {2019},

Keywords = {robotpub, vision estimation, aeropub},

Url1 = {http://ncr.mae.ufl.edu/dissertations/belldissertation.pdf}

}

@PhdThesis{Bellman2015,

Title = {Control of Cycling Induced by Functional Electrical Stimulation: A Switched Systems Theory Approach},

Author = {M. Bellman},

School = {University of Florida},

Year = {2015},

Keywords = {NMES, cycle, switched},

Url1 = {http://ncr.mae.ufl.edu/dissertations/Bellman.pdf}

}

@InProceedings{Bell.Deptula.ea2018,

Title = {Velocity and Path Reconstruction of a Moving Object Using a Moving Camera},

Author = {Z.I. Bell and P. Deptula and H.-Y. Chen and E. Doucette and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2018},

Pages = {5256-5261},

Keywords = {robotpub, vision estimation},

Owner = {wdixon},

Timestamp = {2018.01.22}

}

@Article{Bell.Nezvadovitz.ea2020,

author = {Z. Bell and J. Nezvadovitz and A. Parikh and E. Schwartz and W. Dixon},

journal = {IEEE J. Ocean Eng.},

title = {Global Exponential Tracking Control for an Autonomous Surface Vessel: An Integral Concurrent Learning Approach},

year = {2020},

month = apr,

number = {2},

pages = {362-370},

volume = {45},

keywords = {robotpub, learning, aeropub, RT2},

url1 = {http://ncr.mae.ufl.edu/papers/JOE20.pdf},

}

@InProceedings{Bell.Parikh.ea2016,

Title = {Adaptive Control of a Surface Marine Craft with Parameter Identification Using Integral Concurrent Learning},

Author = {Z. Bell and A. Parikh and J. Nezvadovitz and W. E. Dixon},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2016},

Pages = {389-394},

Keywords = {robotpub, learning, aeropub},

Owner = {wdixon},

Timestamp = {2018.01.11}

}

@InProceedings{Bell.Chen.ea2017,

Title = {Single Scene and Path Reconstruction with a Monocular Camera Using Integral Concurrent Learning},

Author = {Z. I. Bell and H.-Y. Chen and A. Parikh and W. E. Dixon},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2017},

Pages = {3670-3675},

Keywords = {robotpub, vision estimation},

Owner = {wdixon},

Timestamp = {2018.01.11}

}

@InProceedings{Bell.Harris.ea2019,

author = {Z. I. Bell and C. Harris and R. Sun and W. E. Dixon},

booktitle = {Proc. IEEE Conf. Decis. Control},

title = {Structure and Velocity Estimation of a Moving Object via Synthetic Persistence by a Network of Stationary Cameras},

year = {2019},

address = {Nice, France},

month = {Dec.},

pages = {1601-1606},

keywords = {robotpub, vision estimation, Runhan},

owner = {wdixon},

}

@Article{Bellman.Cheng.ea2016,

Title = {Switched Control of Cadence During Stationary Cycling Induced by Functional Electrical Stimulation},

Author = {M. J. Bellman and T. -H. Cheng and R. J. Downey and C. J. Hass and W. E. Dixon},

Journal = {IEEE Trans. Neural Syst. Rehabil. Eng.},

Year = {2016},

Number = {12},

Pages = {1373-1383},

Volume = {24},

Keywords = {NMES, switched, cycle},

Owner = {wdixon},

Timestamp = {2016.12.07},

Url1 = {http://ncr.mae.ufl.edu/papers/TNSRE16.pdf}

}

@InProceedings{Bellman.Cheng.ea2014a,

Title = {Cadence Control of Stationary Cycling Induced by Switched Functional Electrical Stimulation Control},

Author = {M. J. Bellman and T.-H. Cheng and R. Downey and W. E. Dixon},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2014},

Keywords = {NMES, cycle, switched},

Owner = {wdixon},

Timestamp = {2016.03.15}

}

@InProceedings{Bellman.Cheng.ea2014,

Title = {Stationary Cycling Induced by Switched Functional Electrical Stimulation Control},

Author = {M. J. Bellman and T.-H. Cheng and R. J. Downey and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2014},

Pages = {4802-4809},

Keywords = {NMES, cycle, switched},

Owner = {wdixon},

Timestamp = {2016.03.15}

}

@Article{Bellman.Downey.ea2017,

Title = {Automatic Control of Cycling Induced by Functional Electrical Stimulation with Electric Motor Assistance},

Author = {M. J. Bellman and R. J. Downey and A. Parikh and W. E. Dixon},

Journal = {IEEE Trans. Autom. Science Eng.},

Year = {2017},

Month = {April},

Number = {2},

Pages = {1225-1234},

Volume = {14},

Doi = {10.1109/TASE.2016.2527716},

Keywords = {NMES, cycle, switched},

Owner = {wdixon},

Timestamp = {2017.04.12},

Url1 = {http://ncr.mae.ufl.edu/papers/TASE17.pdf}

}

@PhdThesis{Bhasin2011b,

author = {S. Bhasin},

school = {University of Florida},

title = {Reinforcement Learning and Optimal Control Methods for Uncertain Nonlinear Systems},

year = {2011},

keywords = {RISE, Optimal, delay, NN, theory, learning, fault},

timestamp = {2017.05.17},

Url1 = {http://ncr.mae.ufl.edu/dissertations/bhasin.pdf}

}

@InBook{Bhasin2008,

Title = {Robot Manipulators},

Author = {S. Bhasin and K. Dupree and W. E. Dixon},

Chapter = {Control of Robotic Systems Undergoing a Non-Contact to Contact Transition},

Editor = {M. Ceccarelli},

Pages = {113-136},

Publisher = {In-Tech},

Year = {2008},

ISBN = {9789537619060},

Keywords = {robotpub},

Timestamp = {2017.05.08},

Url = {http://books.i-techonline.com/downloadpdf.php?id=5583}

}

@InProceedings{Bhasin2008a,

author = {S. Bhasin and K. Dupree and P. Patre and W. E. Dixon},

booktitle = {Proc. ASME Dyn. Syst. Control Conf.},

title = {Neural Network Control of a Robot Interacting with an Uncertain Hunt-Crossley Viscoelastic Environment},

year = {2008},

address = {Ann Arbor, Michigan},

month = {Sep.},

keywords = {RISE, Robotpub, NN, learning},

timestamp = {2017.05.08},

}

@Article{Bhasin2011a,

author = {S. Bhasin and K. Dupree and P. M. Patre and W. E. Dixon},

journal = {IEEE Trans. Control Syst. Technol.},

title = {Neural Network Control of a Robot Interacting with an Uncertain Viscoelastic Environment},

year = {2011},

number = {4},

pages = {947-955},

volume = {19},

keywords = {Robotpub, Impact, NN, theory},

timestamp = {2017.05.08},

url = {http://ncr.mae.ufl.edu/papers/CST11.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/CST11.pdf},

}

@InProceedings{Bhasin2009,

author = {S. Bhasin and K. Dupree and Z. D. Wilcox and W. E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Adaptive Control of a Robotic System Undergoing a Non-Contact to Contact Transition with a Viscoelastic Environment},

year = {2009},

address = {St. Louis, Missouri},

month = {Jun.},

pages = {3506-3511},

keywords = {Robotpub, Impact},

timestamp = {2017.05.08},

}

@InProceedings{Bhasin2010b,

author = {S. Bhasin and M. Johnson and W. E. Dixon},

booktitle = {Proc. IEEE Conf. Decis. Control},

title = {A model-free robust policy iteration algorithm for optimal control of nonlinear systems},

year = {2010},

address = {Atlanta, GA},

pages = {3060-3065},

keywords = {Optimal, NN, theory, learning},

}

@Article{Bhasin.Kamalapurkar.ea2013b,

author = {S. Bhasin and R. Kamalapurkar and H. T. Dinh and W.E. Dixon},

journal = {IEEE Trans. Autom. Control},

title = {Robust identification-based state derivative estimation for nonlinear systems},

year = {2013},

month = {Jan.},

number = {1},

pages = {187-192},

volume = {58},

keywords = {RISE, NN, theory, fault},

url1 = {http://ncr.mae.ufl.edu/papers/tac12_4.pdf},

}

@Article{Bhasin.Kamalapurkar.ea2013a,

author = {Shubhendu Bhasin and Rushikesh Kamalapurkar and Marcus Johnson and Kyriakos G. Vamvoudakis and Frank L. Lewis and Warren E. Dixon},

journal = {Automatica},

title = {A novel actor-critic-identifier architecture for approximate optimal control of uncertain nonlinear systems},

year = {2013},

month = {Jan.},

number = {1},

pages = {89-92},

volume = {49},

keywords = {RISE, Optimal, NN, theory, learning},

owner = {wdixon},

timestamp = {2012.07.30},

url1 = {http://ncr.mae.ufl.edu/papers/auto13.pdf},

}

@InBook{Bhasin.Kamalapurkar.ea2013,

author = {Shubhendu Bhasin and Rushikesh Kamalapurkar and Marcus Johnson and Kyriakos G. Vamvoudakis and Frank L. Lewis and Warren E. Dixon},

chapter = {An Actor-Critic-Identifier Architecture for Adaptive Approximate Optimal Control},

editor = {F. L. Lewis and D. Liu},

pages = {258-278},

publisher = {Wiley and IEEE Press},

title = {Reinforcement Learning and Approximate Dynamic Programming for Feedback Control},

year = {2012},

series = {IEEE Press Series on Computational Intelligence},

keywords = {RISE, Optimal, NN, theory, learning},

owner = {wdixon},

timestamp = {2013.12.19},

}

@InProceedings{Bhasin2010,

author = {S. Bhasin and P. Patre and Z. Kan and W. E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Control of a Robot Interacting with an Uncertain Viscoelastic Environment with Adjustable Force Bounds},

year = {2010},

address = {Baltimore, MD},

pages = {5242-5247},

keywords = {Robotpub, Impact, Saturated, NN},

timestamp = {2017.05.08},

}

@Article{Bhasin2011,

author = {S. Bhasin and N. Sharma and P. Patre and W. E. Dixon},

journal = {J. Control Theory Appl.},

title = {Asymptotic Tracking by a Reinforcement Learning-Based Adaptive Critic Controller},

year = {2011},

number = {3},

pages = {400-409},

volume = {9},

keywords = {RISE, NN, theory, learning},

url1 = {http://ncr.mae.ufl.edu/papers/JCTA11.pdf},

}

@InProceedings{Bhasin2010a,

author = {S. Bhasin and N. Sharma and P. Patre and W. E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Robust Asymptotic Tracking of a Class of Nonlinear Systems using an Adaptive Critic Based Controller},

year = {2010},

address = {Baltimore, MD},

pages = {3223-3228},

keywords = {RISE, NN, theory, learning},

}

@PhdThesis{Bialy2014,

author = {B. Bialy},

school = {University of Florida},

title = {Lyapunov-Based Control of Limit Cycle Oscillations in Uncertain Aircraft Systems},

year = {2014},

keywords = {RISE, LCO, Saturated, NN, aeropub},

owner = {wdixon},

timestamp = {2017.05.17},

url1 = {http://ncr.mae.ufl.edu/dissertations/bialy.pdf},

}

@InProceedings{Bialy.Andrews.ea2013,

author = {B. Bialy and L. Andrews and J. Curtis and W. E. Dixon},

booktitle = {Proc. AIAA Guid., Navig., Control Conf., AIAA 2013-4529},

title = {Saturated {RISE} Tracking Control of Store-Induced Limit Cycle Oscillations},

year = {2013},

month = {Aug.},

keywords = {RISE, LCO, Saturated, NN, aeropub},

owner = {wdixon},

timestamp = {2017.05.08},

}

@Article{Bialy.Andrews.ea2014,

author = {B. Bialy and L. Andrews and J. W. Curtis and W. E. Dixon},

journal = {AIAA J. Guid. Control Dyn.},

title = {Saturated Tracking Control of Store-Induced Limit Cycle Oscillations},

year = {2014},

pages = {1316-1322},

volume = {37},

keywords = {RISE, LCO, Saturated, NN, aeropub},

owner = {wdixon},

timestamp = {2017.05.08},

url1 = {http://ncr.mae.ufl.edu/papers/JGCD14.pdf},

}

@Article{Bialy.Chakraborty.ea2016,

Title = {Adaptive Boundary Control of Store Induced Oscillations in a Flexible Aircraft Wing},

Author = {B. Bialy and I. Chakraborty and S. Cekic and W. E. Dixon},

Journal = {Automatica},

Year = {2016},

Pages = {230-238},

Volume = {70},

Keywords = {LCO, PDE, aeropub},

Owner = {wdixon},

Timestamp = {2017.05.08},

Url1 = {http://ncr.mae.ufl.edu/papers/auto163.pdf}

}

@InProceedings{Bialy.Klotz.ea2013,

author = {B. Bialy and J. Klotz and K. Brink and W. E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Lyapunov-Based Robust Adaptive Control of a Quadrotor {UAV} in the Presence of Modeling Uncertainties},

year = {2013},

address = {Washington, DC},

month = {Jun.},

pages = {13-18},

keywords = {RISE, Robotpub, UAV, aeropub},

owner = {wdixon},

timestamp = {2017.05.08},

}

@InProceedings{Bialy.Klotz.ea2012,

Title = {An Adaptive Backstepping Controller for a Hypersonic Air-Breathing Missile},

Author = {B. J. Bialy and J. Klotz and J. W. Curtis and W. E. Dixon},

Booktitle = {Proc. AIAA Guid., Navig., Control Conf., AIAA-2012-4468},

Year = {2012},

Keywords = {Hypersonic, aeropub},

Owner = {Brendan},

Timestamp = {2017.05.08}

}

@Article{Bialy.Pasiliao.ea2014,

Title = {Lyapunov-Based Tracking Control of Store-Induced Limit Cycle Oscillations in an Airfoil Section},

Author = {B. J. Bialy and Crystal L. Pasiliao and H. T. Dinh and W. E. Dixon},

Journal = {ASME J. Dyn. Syst. Meas. Control},

Year = {2014},

Month = {Nov.},

Number = {6},

Volume = {136},

Keywords = {RISE, aeropub},

Owner = {wdixon},

Timestamp = {2017.05.09},

Url1 = {http://ncr.mae.ufl.edu/papers/jdsmc14.pdf}

}

@InProceedings{Bialy.Pasiliao.ea2012,

author = {B. J. Bialy and C. L. Pasiliao and H. T. Dinh and W. E. Dixon},

booktitle = {Proc. ASME Dyn. Syst. Control Conf.},

title = {Lyapunov-Based Tracking of Store-Induced Limit Cycle Oscillations in an Aeroelastic System},

year = {2012},

address = {Fort Lauderdale, Florida},

month = {Oct.},

keywords = {RISE, aeropub},

owner = {Brendan Bialy},

timestamp = {2017.05.08},

}

@MastersThesis{Bouillon2013a,

author = {F. Bouillon},

school = {University of Florida},

title = {Measure, Modeling and Compensation of Fatigue Induced Delay During Neuromuscular Electrical Stimulation},

year = {2013},

keywords = {RISE, NMES, delay, NN, theory},

owner = {wdixon},

timestamp = {2017.05.08},

}

@Article{Braganza2008,

Title = {Tracking Control for Robot Manipulators with Kinematic and Dynamic Uncertainty},

Author = {D. Braganza and W. E. Dixon and D. M. Dawson and B. Xian},

Journal = {Int. J. Robot. Autom.},

Year = {2008},

Pages = {117-126},

Volume = {23},

Keywords = {Robotpub},

Timestamp = {2017.05.08},

Url1 = {http://ncr.mae.ufl.edu/papers/IJRA08.pdf}

}

@InProceedings{Braganza2005,

Title = {Tracking Control for Robot Manipulators with Kinematic and Dynamic Uncertainty},

Author = {D. Braganza and W. E. Dixon and D. M. Dawson and B. Xian},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2005},

Address = {Seville, Spain},

Month = {Dec.},

Pages = {5293-5297},

Keywords = {Robotpub},

Timestamp = {2017.05.08}

}

@InProceedings{Cannataro.Kan.ea2016,

Title = {Followers Distribution Algorithms for Leader-Follower Networks},

Author = {B. Cannataro and Z. Kan and W. E. Dixon},

Booktitle = {IEEE Multi-Conf. Syst. and Control},

Year = {2016},

Keywords = {networkpub},

Owner = {wdixon},

Timestamp = {2017.05.08}

}

@InProceedings{Causey2007,

Title = {Dynamic Target State Estimation for Autonomous Aerial Vehicles using a Monocular Camera System},

Author = {R. S. Causey and S. S. Mehta and R. Lind and W. E. Dixon},

Booktitle = {SAE AeroTech Congr. Exhib.},

Year = {2007},

Address = {Los Angeles, CA},

Month = {Sep.},

Keywords = {UAV, robotpub, vision estimation, aeropub},

Timestamp = {2017.05.08}

}

@PhdThesis{Chakraborty2017,

Title = {Partial Differential Equation Based Control of Nonlinear Systems With Input Delays},

Author = {I. Chakraborty},

School = {University of Florida},

Year = {2017},

Keywords = {aeropub, theory, delay},

Owner = {wdixon},

Timestamp = {2017.11.27},

Url1 = {http://ncr.mae.ufl.edu/dissertations/Indra2017.pdf}

}

@InProceedings{Chakraborty.Mehta.ea2016,

Title = {Compensating for Time-Varying Input and State Delays Inherent to Image-Based Control Systems},

Author = {I. Chakraborty and S. Mehta and J. W. Curtis and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2016},

Pages = {78-83},

Keywords = {vision estimation, aeropub, theory},

Timestamp = {2017.07.12}

}

@InProceedings{Chakraborty.Mehta.ea2017,

Title = {2.5{D} Visual Servo Control in the Presence of Time-Varying State and Input Delays},

Author = {I. Chakraborty and S. Mehta and E. Doucette and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2017},

Keywords = {vision, theory, delay},

Owner = {wdixon},

Timestamp = {2017.05.08}

}

@InProceedings{Chakraborty.Mehta.ea2017a,

Title = {Control of an Input Delayed Uncertain Nonlinear System with Adaptive Delay Estimation},

Author = {I. Chakraborty and S. Mehta and E. Doucette and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2017},

Keywords = {delay, theory},

Owner = {wdixon},

Timestamp = {2017.05.08}

}

@InProceedings{Chakraborty.Obuz.ea2016a,

Title = {Control of an Uncertain Nonlinear System with Known Time-Varying Input Delays with Arbitrary Delay Rates},

Author = {I. Chakraborty and S. Obuz and W. E. Dixon},

Booktitle = {Proc. IFAC Symp. on Nonlinear Control Sys.},

Year = {2016},

Keywords = {delay, PDE, theory},

Owner = {wdixon},

Timestamp = {2017.05.08}

}

@InProceedings{Chakraborty.Obuz.ea2016b,

Title = {Image-Based Tracking Control in the Presence of Time-Varying Input and State Delays},

Author = {I. Chakraborty and S. Obuz and W. E. Dixon},

Booktitle = {IEEE Multi-Conf. Syst. and Control},

Year = {2016},

Keywords = {vision control, delay, pde, theory},

Owner = {wdixon},

Timestamp = {2017.05.08}

}

@InProceedings{Chakraborty.Obuz.ea2016,

Title = {Control of an Uncertain Euler-Lagrange System with Known Time-Varying Input Delay: A PDE-Based Approach},

Author = {I. Chakraborty and S. Obuz and R. Licitra and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2016},

Pages = {4344-4349},

Keywords = {delay, PDE, theory},

Timestamp = {2017.05.08}

}

@PhdThesis{Chen2018,

Title = {A Switched Systems Framework For Nonlinear Dynamic Systems With Intermittent State Feedback},

Author = {Hsi-Yuan Chen},

School = {University of Florida},

Year = {2018},

Keywords = {theory, robotpub, Vision Estimation, switched},

Url1 = {http://ncr.mae.ufl.edu/dissertations/StevenChen2018.pdf}

}

@Article{Chen.Bell.ea2019,

Title = {A Switched Systems Approach to Path Following with Intermittent State Feedback},

Author = {H.-Y. Chen and Z. Bell and P. Deptula and W. E. Dixon},

Journal = {IEEE Trans. Robot.},

Year = {2019},

Number = {3},

Pages = {725-733},

Volume = {35},

Keywords = {robotpub, theory},

Url1 = {http://ncr.mae.ufl.edu/papers/tro19.pdf}

}

@Article{Chen.Bell.ea2018,

Title = {A Switched Systems Framework for Path Following with Intermittent State Feedback},

Author = {H.-Y. Chen and Z. I. Bell and P. Deptula and W. E. Dixon},

Journal = {IEEE Control Syst. Lett.},

Year = {2018},

Month = oct,

Number = {4},

Pages = {749-754},

Volume = {2},

Keywords = {vision estimation, switched, robotpub},

Owner = {wdixon},

Timestamp = {2018.06.01},

Url1 = {http://ncr.mae.ufl.edu/papers/CSL18_3.pdf}

}

@InProceedings{Chen.Bell.ea2017,

author = {H.-Y. Chen and Z. I. Bell and R. Licitra and W. E. Dixon},

booktitle = {Proc. IEEE Conf. Decis. Control},

title = {Switched Systems Approach to Vision-Based Tracking Control of Wheeled Mobile Robots},

year = {2017},

pages = {4902-4907},

keywords = {robotpub},

owner = {wdixon},

timestamp = {2018.01.11},

}

@InProceedings{Chen2003c,

author = {J. Chen and A. Behal and D. M. Dawson and W. E. Dixon},

booktitle = {Proc. IEEE Conf. Decis. Control},

title = {Adaptive Visual Servoing in the Presence of Intrinsic Calibration Uncertainty},

year = {2003},

address = {Maui, Hawaii USA},

month = {Dec.},

pages = {5396-5401},

keywords = {Robotpub, vision control},

timestamp = {2017.05.09},

}

@Article{Chen.Dawson.ea2005,

Title = {Adaptive Homography-Based Visual Servo Tracking for Fixed and Camera-in-Hand Configurations},

Author = {J. Chen and D. M. Dawson and W. E. Dixon and A. Behal},

Journal = {IEEE Trans. Control Syst. Technol.},

Year = {2005},

Pages = {814-825},

Volume = {13},

Keywords = {Robotpub, vision control},

Timestamp = {2017.05.08},

Url = {http://ncr.mae.ufl.edu/papers/cst05.pdf},

Url1 = {http://ncr.mae.ufl.edu/papers/cst05.pdf}

}

@InProceedings{Chen2003,

Title = {Adaptive Homography-Based Visual Servo Tracking},

Author = {J. Chen and D. M. Dawson and W. E. Dixon and A. Behal},

Booktitle = {Proc. IEEE/RSJ Int. Conf. Intell. Robot. Syst.},

Year = {2003},

Address = {Las Vegas, Nevada},

Month = {Oct.},

Pages = {230-235},

Keywords = {Robotpub, vision control},

Timestamp = {2017.05.08}

}

@Article{Chen2007,

author = {J. Chen and D. M. Dawson and W. E. Dixon and V. Chitrakaran},

journal = {Automatica},

title = {Navigation Function Based Visual Servo Control},

year = {2007},

pages = {1165-1177},

volume = {43},

keywords = {Robotpub, vision control},

timestamp = {2017.05.09},

url = {http://ncr.mae.ufl.edu/papers/auto07.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/auto07.pdf},

}

@InProceedings{Chen2005b,

author = {J. Chen and D. M. Dawson and W. E. Dixon and V. Chitrakaran},

booktitle = {Proc. Am. Control Conf.},

title = {Navigation Function Based Visual Servo Control},

year = {2005},

address = {Portland, Oregon},

month = {Jun.},

pages = {3682-3687},

keywords = {Robotpub, vision control},

timestamp = {2017.05.09},

}

@InProceedings{Chen2004,

author = {J. Chen and D. M. Dawson and W. E. Dixon and V. Chitrakaran},

booktitle = {Proc. IEEE Conf. Decis. Control},

title = {An Optimization-Based Approach for Fusing Image-Based Trajectory Generation with Position-Based Visual Servo Control},

year = {2004},

address = {Paradise Island, Bahamas},

month = {Dec.},

pages = {4034-4039},

keywords = {Robotpub, Optimal, vision control},

timestamp = {2017.05.09},

}

@InProceedings{Chen2004a,

author = {J. Chen and W. E. Dixon and D. M. Dawson and V. Chitrakaran},

booktitle = {Proc. IEEE Conf. Control Appl.},

title = {Visual Servo Tracking Control of a Wheeled Mobile Robot with a Monocular Fixed Camera},

year = {2004},

address = {Taipei, Taiwan},

keywords = {Robotpub, WMR, vision control},

timestamp = {2017.05.09},

}

@InProceedings{Chen2005a,

author = {J. Chen and W. E. Dixon and D. M. Dawson and T. Galluzzo},

booktitle = {Proc. IEEE/ASME Int. Conf. Adv. Intell. Mechatron.},

title = {Navigation and Control of a Wheeled Mobile Robot},

year = {2005},

address = {Monterey, CA},

month = {Jul.},

pages = {1145-1150},

keywords = {Robotpub, WMR},

timestamp = {2017.05.09},

}

@InProceedings{Chen2003a,

author = {J. Chen and W. E. Dixon and D. M. Dawson and M. McIntyre},

booktitle = {Proc. IEEE/RSJ Int. Conf. Intell. Robot. Syst.},

title = {Homography-Based Visual Servo Tracking Control of a Wheeled Mobile Robot},

year = {2003},

address = {Las Vegas, Nevada},

month = {Oct.},

pages = {1814-1819},

keywords = {Robotpub, WMR, vision control},

timestamp = {2017.05.08},

}

@Article{Chen2006,

author = {J. Chen and W. E. Dixon and D. M. Dawson and M. McIntyre},

journal = {IEEE Trans. Robot.},

title = {Homography-Based Visual Servo Tracking Control of a Wheeled Mobile Robot},

year = {2006},

pages = {406-415},

volume = {22},

keywords = {Robotpub, WMR, vision control},

timestamp = {2017.05.09},

url = {http://ncr.mae.ufl.edu/papers/tr06.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/tr06.pdf},

}

@InProceedings{Chen2002,

Title = {Exponential Tracking Control of a Hydraulic Proportional Directional Valve and Cylinder via Integrator Backstepping},

Author = {J. Chen and W. E. Dixon and J. R. Wagner and D. M. Dawson},

Booktitle = {Proc. ASME Int. Mech. Eng. Congr. Expo.},

Year = {2002},

Month = {Nov.},

Keywords = {robotpub}

}

@Article{Cheng.Kan.ea2017,

author = {T. H. Cheng and Z. Kan and J. R. Klotz and J. M. Shea and W. E. Dixon},

journal = {IEEE Trans. Autom. Control},

title = {Event-Triggered Control of Multi-Agent Systems for Fixed and Time-Varying Network Topologies},

year = {2017},

number = {10},

pages = {5365-5371},

volume = {62},

keywords = {Robotpub, Networkpub, theory},

owner = {wdixon},

timestamp = {2017.09.28},

url1 = {http://ncr.mae.ufl.edu/papers/TAC17b.pdf},

}

@Article{Cheng.Wang.ea2016,

author = {T. H. Cheng and Q. Wang and R. Kamalapurkar and H. T. Dinh and M. J. Bellman and W. E. Dixon},

journal = {IEEE Trans. Cybern.},

title = {Identification-based Closed-loop NMES Limb Tracking with Amplitude-Modulated Control Input},

year = {2016},

month = {July},

number = {7},

pages = {1679-1690},

volume = {46},

keywords = {NMES, NN, switched},

owner = {wdixon},

timestamp = {2016.06.28},

url1 = {http://ncr.mae.ufl.edu/papers/cyber16.pdf},

}

@PhdThesis{Cheng2015,

Title = {Lyapunov-Based Switched Systems Control},

Author = {T.-H. Cheng},

School = {University of Florida},

Year = {2015},

Keywords = {switched, networkpub, theory},

Owner = {wdixon},

Timestamp = {2017.05.09},

url1 = {http://ncr.mae.ufl.edu/dissertations/cheng2015.pdf},

}

@InProceedings{Cheng.Downey.ea2013,

Title = {Robust output feedback control of uncertain switched Euler-Lagrange systems},

Author = {T.-H. Cheng and R. Downey and W. E. Dixon},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2013},

Address = {Florence, Italy},

Month = {Dec.},

Pages = {4668-4673},

Keywords = {Robotpub, theory},

Owner = {wdixon},

Timestamp = {2017.05.09}

}

@InProceedings{Cheng.Kan.ea2015,

author = {Teng-Hu Cheng and Zhen Kan and Justin Klotz and John Shea and Warren E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Decentralized Event-Triggered Control of Networked Systems- {P}art 1: Leader-Follower Consensus Under Switching},

year = {2015},

pages = {5438-5443},

keywords = {Robotpub, Networkpub, theory},

owner = {wdixon},

timestamp = {2017.05.09},

}

@InProceedings{Cheng.Kan.ea2015a,

author = {Teng-Hu Cheng and Zhen Kan and Justin Klotz and John Shea and Warren E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Decentralized Event-Triggered Control of Networked Systems- {P}art 2: Containment Control},

year = {2015},

pages = {5444-5448},

keywords = {Robotpub, Networkpub, theory},

owner = {wdixon},

timestamp = {2017.05.09},

}

@InProceedings{Cheng.Kan.ea2014,

author = {T.-H. Cheng and Z. Kan and J. A. Rosenfeld and W. E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Decentralized formation control with connectivity maintenance and collision avoidance under limited and intermittent sensing},

year = {2014},

pages = {3201-3206},

keywords = {Robotpub, Networkpub, theory},

owner = {wdixon},

timestamp = {2017.05.09},

}

@InProceedings{Cheng.Kan.ea2014a,

author = {T.-H. Cheng and Z. Kan and J. Shea and W. E. Dixon},

booktitle = {Proc. IEEE Conf. Decis. Control},

title = {Decentralized Event-Triggered Control for Leader-follower Consensus},

year = {2014},

address = {Los Angeles, CA},

pages = {1244-1249},

keywords = {Robotpub, Networkpub, theory},

owner = {wdixon},

timestamp = {2017.05.09},

}

@InProceedings{Chitrakaran2005a,

Title = {Structure From Motion: A Lyapunov-Based Nonlinear Estimation Approach},

Author = {V. Chitrakaran and D. M. Dawson and J. Chen and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2005},

Address = {Portland, Oregon},

Month = {Jun.},

Pages = {4601-4606},

Keywords = {vision estimation},

Timestamp = {2017.05.09}

}

@Article{Chitrakaran2005,

Title = {Identification of a Moving Object's Velocity with a Fixed Camera},

Author = {V. Chitrakaran and D. M. Dawson and W. E. Dixon and J. Chen},

Journal = {Automatica},

Year = {2005},

Pages = {553-562},

Volume = {41},

Keywords = {vision estimation},

Timestamp = {2017.05.09},

Url = {http://ncr.mae.ufl.edu/papers/auto05.pdf},

Url1 = {http://ncr.mae.ufl.edu/papers/auto05.pdf}

}

@InProceedings{Chitrakaran2003,

Title = {Identification of a Moving Object's Velocity with a Fixed Camera},

Author = {V. Chitrakaran and D. M. Dawson and W. E. Dixon and J. Chen},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2003},

Address = {Maui, Hawaii, USA},

Month = {Dec.},

Pages = {5402-5407},

Keywords = {vision estimation},

Timestamp = {2017.05.09}

}

@Article{Chwa.Dani.ea2016,

author = {D. Chwa and A. Dani and W. E. Dixon},

journal = {IEEE Trans. Control Syst. Technol.},

title = {Range and Motion Estimation of a Monocular Camera using Static and Moving Objects},

year = {2016},

month = {July},

number = {4},

pages = {1174-1183},

volume = {24},

keywords = {RISE, vision estimation},

owner = {wdixon},

timestamp = {2017.05.09},

url1 = {http://ncr.mae.ufl.edu/papers/cst162.pdf},

}

@InProceedings{Chwa.Dani.ea2012,

author = {Chwa, D. and Dani, A. and Kim, H. and W. E. Dixon},

booktitle = {Proc. IEEE Int. Conf. Syst. Man Cybern.},

title = {Camera Motion Estimation for 3-D Structure Reconstruction of Moving Objects},

year = {2012},

pages = {1788-1793},

keywords = {RISE, vision estimation},

owner = {wdixon},

timestamp = {2017.05.09},

}

@PhdThesis{Cousin2019,

Title = {Hybrid Exoskeletons For Rehabilitation: A Nonlinear Control Approach},

Author = {C. A. Cousin},

School = {University of Florida},

Year = {2019},

Keywords = {NMES, FES, robotpub},

Url1 = {http://ncr.mae.ufl.edu/dissertations/cousin_diss19.pdf}

}

@InProceedings{Cousin.Duenas.ea2018c,

Title = {Admittance Control of Motorized Functional Electrical Stimulation Cycle},

Author = {C. A. Cousin and V. Duenas and C. Rouse and W. E. Dixon},

Booktitle = {Proc. IFAC Conf. Cyber. Phys. Hum. Syst.},

Year = {2018},

Pages = {328-333},

Keywords = {FES, NMES}

}

@Article{Cousin.Rouse.ea2019,

Title = {Controlling the Cadence and Admittance of a Functional Electrical Stimulation Cycle},

Author = {C. A. Cousin and C. A. Rouse and V. H. Duenas and W. E. Dixon},

Journal = {IEEE Trans. Neural Syst. Rehabil. Eng.},

Year = {2019},

Month = {June},

Number = {6},

Pages = {1181-1192},

Volume = {27},

Keywords = {FES, NMES, robotpub},

Url1 = {http://ncr.mae.ufl.edu/papers/tnsre19.pdf}

}

@InProceedings{Cousin.Rouse.ea2017,

Title = {Position and Torque Control via Rehabilitation Robot and Functional Electrical Stimulation},

Author = {C. A. Cousin and C. A. Rouse and V. H. Duenas and W. E. Dixon},

Booktitle = {IEEE Int. Conf. Rehabil. Robot.},

Year = {2017},

Keywords = {robotpub, NMES},

Owner = {wdixon},

Timestamp = {2017.08.02}

}

@InProceedings{Cousin.Deptula.ea2019,

Title = {Cycling With Functional Electrical Stimulation and Adaptive Neural Network Admittance Control},

Author = {C. Cousin and P. Deptula and C. Rouse and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2019},

Pages = {1742-1747},

Keywords = {NMES, FES, NN}

}

@Article{Cousin.Duenas.ea2020,

author = {C. Cousin and V. Duenas and C. Rouse and M. Bellman and P. Freeborn and E. Fox and W. E. Dixon},

journal = {IEEE Trans. Control Syst. Technol.},

title = {Closed-Loop Cadence and Instantaneous Power Control on a Motorized Functional Electrical Stimulation Cycle},

year = {2020},

month = {November},

number = {6},

pages = {2276-2291},

volume = {28},

keywords = {FES, NMES, robotpub},

url1 = {http://ncr.mae.ufl.edu/papers/cst20a.pdf},

}

@InProceedings{Cousin.Duenas.ea2018,

Title = {Admittance Trajectory Tracking using a Challenge-Based Rehabilitation Robot with Functional Electrical Stimulation},

Author = {C. Cousin and V. H. Duenas and C. Rouse and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2018},

Pages = {3732-3737},

Keywords = {robotpub, NMES},

Owner = {wdixon},

Timestamp = {2018.01.22}

}

@InProceedings{Cousin.Duenas.ea2018a,

Title = {Stable Cadence Tracking of Admitting Functional Electrical Stimulation Cycle},

Author = {C. Cousin and V. H. Duenas and C. Rouse and W. E. Dixon},

Booktitle = {Proc. ASME Dyn. Syst. Control Conf.},

Year = {2018},

Keywords = {FES, NMES},

Owner = {wdixon},

Timestamp = {2018.06.05}

}

@InProceedings{Cousin.Duenas.ea2018b,

Title = {Cadence and Admittance Control of a Motorized Functional Electrical Stimulation Cycle},

Author = {C. Cousin and V. H. Duenas and C. Rouse and W. E. Dixon},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2018},

Month = dec,

Pages = {6470-6475},

Keywords = {NMES, switched, robotpub, FES}

}

@InProceedings{Cousin.Duenas.ea2017,

Title = {Motorized Functional Electrical Stimulation for Torque and Cadence Tracking: A Switched {L}yapunov Approach},

Author = {C. Cousin and V. H. Duenas and C. Rouse and W. E. Dixon},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2017},

Pages = {5900-5905},

Keywords = {robotpub, NMES},

Owner = {wdixon},

Timestamp = {2018.01.11}

}

@PhdThesis{Dani2011a,

Title = {Lyapunov-Based Nonlinear Estimation Methods With Applications To Machine Vision},

Author = {A. Dani},

School = {University of Florida},

Year = {2011},

Keywords = {vision estimation, theory, RISE},

Owner = {wdixon},

Timestamp = {2017.05.17},

Url1 = {http://ncr.mae.ufl.edu/dissertations/ashwinD.pdf}

}

@Article{Dani2012,

author = {A. Dani and N. Fischer and W. E. Dixon},

journal = {IEEE Trans. Autom. Control},

title = {Single Camera Structure and Motion},

year = {2012},

month = {Jan.},

number = {1},

pages = {241-246},

volume = {57},

keywords = {RISE, vision estimation},

timestamp = {2017.05.09},

url1 = {http://ncr.mae.ufl.edu/papers/tac12.pdf},

}

@Article{DaniT.A.b,

author = {A. Dani and N. Fischer and Z. Kan and W. E. Dixon},

journal = {Mechatronics},

title = {Globally Exponentially Stable Observer for Vision-Based Range Estimation},

year = {2012},

note = {Special Issue on Visual Servoing},

number = {4},

pages = {381-389},

volume = {22},

keywords = {Robotpub, AUV, vision estimation},

timestamp = {2017.05.09},

url1 = {http://ncr.mae.ufl.edu/papers/mech12_1.pdf},

}

@InProceedings{Dani2010a,

Title = {Nonlinear Observer for Structure Estimation using a Paracatadioptric Camera},

Author = {A. Dani and N. Fischer and Z. Kan and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2010},

Address = {Baltimore, MD},

Pages = {3487-3492},

Keywords = {RISE, vision estimation},

Timestamp = {2017.05.09}

}

@InProceedings{Dani2009,

author = {A. P. Dani and N. R. Gans and W. E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Position-Based Visual Servo Control of Leader-Follower Formation Using Image-Based Relative Pose and Relative Velocity Estimation},

year = {2009},

address = {St. Louis, Missouri},

month = {Jun.},

pages = {5271-5276},

keywords = {Robotpub, Networkpub, NN, vision estimation},

timestamp = {2017.05.09},

}

@InProceedings{Dani2011,

Title = {Structure Estimation of a Moving Object Using a Moving Camera: An Unknown Input Observer Approach},

Author = {A. Dani and Z. Kan and N. Fischer and W. E. Dixon},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2011},

Address = {Orlando, FL},

Pages = {5005-5012},

Keywords = {vision estimation}

}

@InProceedings{Dani2010b,

Title = {Structure and Motion Estimation of a Moving Object Using a Moving Camera},

Author = {A. Dani and Z. Kan and N. Fischer and W. E. Dixon},

Booktitle = {Proc. Am. Control Conf.},

Year = {2010},

Address = {Baltimore, MD},

Pages = {6962-6967},

Keywords = {vision estimation}

}

@InProceedings{Dani2010,

author = {A. Dani and K. El Rifai and W. E. Dixon},

booktitle = {Proc. IEEE Int. Symp. Intell. Control},

title = {Globally Exponentially Convergent Observer for Vision-based Range Estimation},

year = {2010},

address = {Yokohama, Japan},

month = {Sep.},

pages = {801-806},

keywords = {Robotpub, vision estimation},

timestamp = {2017.05.09},

}

@InBook{DaniBookChapter,

Title = {Visual Servoing via Advanced Numerical Methods},

Author = {A. P. Dani and W. E. Dixon},

Chapter = {Single Camera Structure and Motion Estimation},

Editor = {G. Chesi and K. Hashimoto},

Pages = {209-229},

Publisher = {Springer},

Year = {2010},

Series = {Lecture Notes in Control and Information Sciences},

Volume = {401},

ISBN = {9781849960885},

Keywords = {vision control},

Timestamp = {2017.05.09},

Url = {http://www.springer.com/engineering/control/book/978-1-84996-088-5}

}

@InBook{Dani.Kan.ea2012,

Title = {Robotic Vision: Technologies for Machine Learning and Vision Applications},

Author = {A. P. Dani and Z. Kan and N. Fischer and W. E. Dixon},

Chapter = {Real-time Structure and Motion Estimation in Dynamic Scenes using a Single Camera},

Editor = {J. Garcia-Rodriguez and M. C. Quevedo},

Pages = {173-191},

Publisher = {IGI Global},

Year = {2012},

Month = {Jul.},

Doi = {10.4018/978-1-4666-2672-0.ch011},

Keywords = {robotpub, vision, theory},

Owner = {wdixon},

Timestamp = {2017.05.09}

}

@InProceedings{Dani2008,

author = {A. P. Dani and S. Velat and C. Crane and N. R. Gans and W. E. Dixon},

booktitle = {Proc. IEEE Conf. Control Appl.},

title = {Experimental Results for an Image-Based Pose and Velocity Estimation Method},

year = {2008},

address = {San Antonio, Texas},

month = {Sep.},

pages = {1159-1164},

keywords = {RISE, vision estimation},

timestamp = {2017.05.09},

}

@PhdThesis{Deptula2019,

Title = {Data-Based Reinforcement Learning: Approximate Optimal Control For Uncertain Nonlinear Systems},

Author = {P. Deptula},

School = {University of Florida},

Year = {2019},

Keywords = {Optimal, NN, Learning, robotpub, theory},

Url1 = {http://ncr.mae.ufl.edu/dissertations/deptula2019.pdf}

}

@Article{Deptula.Bell.ea2020,

author = {P. Deptula and Z. Bell and E. Doucette and J. W. Curtis and W. E. Dixon},

journal = {Automatica},

title = {Data-Based Reinforcement Learning Approximate Optimal Control for an Uncertain Nonlinear System with Control Effectiveness Faults},

year = {2020},

month = {June},

pages = {1-10},

volume = {116},

keywords = {Optimal, NN, Learning, robotpub, theory, RT2, jointAF},

url1 = {http://ncr.mae.ufl.edu/papers/Auto20b.pdf},

}

@InProceedings{Deptula.Bell.ea2018,

author = {P. Deptula and Z. I. Bell and E. Doucette and J. W. Curtis and W. E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Data-Based Reinforcement Learning Approximate Optimal Control for an Uncertain Nonlinear System with Partial Loss of Control Effectiveness},

year = {2018},

pages = {2521-2526},

keywords = {Optimal, NN, theory, learning},

owner = {wdixon},

timestamp = {2018.01.22},

}

@InProceedings{Deptula.Bell.ea2018a,

Title = {Single Agent Indirect Herding via Approximate Dynamic Programming},

Author = {P. Deptula and Z. I. Bell and F. Zegers and R. Licitra and W. E. Dixon},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {2018},

Month = dec,

Pages = {7136-7141},

Keywords = {robotpub, herding, adaptive, optimal, theory}

}

@Article{Deptula.Chen.ea2020,

author = {P. Deptula and H.-Y. Chen and R. Licitra and J. Rosenfeld and W. E. Dixon},

journal = {IEEE Trans. Robot.},

title = {Approximate Optimal Motion Planning to Avoid Unknown Moving Avoidance Regions},

year = {2020},

number = {2},

pages = {414-430},

volume = {36},

keywords = {Optimal, NN, Learning, robotpub, theory},

url1 = {http://ncr.mae.ufl.edu/papers/tro2020.pdf},

}

@InProceedings{Deptula.Licitra.ea2018,

author = {P. Deptula and R. Licitra and J. A. Rosenfeld and W. E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Online Approximate Optimal Path-Planner in the Presence of Mobile Avoidance Regions},

year = {2018},

pages = {2515-2520},

keywords = {robotpub, Optimal, NN, theory},

owner = {wdixon},

timestamp = {2018.01.22},

}

@Article{Deptula.Rosenfeld.ea2018,

Title = {Approximate Dynamic Programming: Combining Regional and Local State Following Approximations},

Author = {P. Deptula and J. Rosenfeld and R. Kamalapurkar and W. E. Dixon},

Journal = {IEEE Trans. Neural Netw. Learn. Syst.},

Year = {2018},

Month = {June},

Number = {6},

Pages = {2154-2166},

Volume = {29},

Keywords = {Optimal, NN, Learning, robotpub, theory},

Owner = {wdixon},

Timestamp = {2018.05.17},

Url1 = {http://ncr.mae.ufl.edu/papers/TNNLS18.pdf}

}

@InProceedings{Dinh2010,

author = {H. Dinh and S. Bhasin and W. E. Dixon},

booktitle = {Proc. IEEE Conf. Decis. Control},

title = {Dynamic Neural Network-based Robust Identification and Control of a Class of Nonlinear Systems},

year = {2010},

address = {Atlanta, GA},

pages = {5536-5541},

keywords = {RISE, NN, theory, learning},

}

@Article{Dinh.Bhasin.ea2017,

Title = {Dynamic Neural Network-Based Output Feedback Tracking Control for Uncertain Nonlinear Systems},

Author = {H. Dinh and S. Bhasin and R. Kamalapurkar and W. E. Dixon},

Journal = {ASME J. Dyn. Syst. Meas. Control},

Year = {2017},

Month = {July},

Number = {7},

Pages = {074502-1-074502-7},

Volume = {139},

Keywords = {learning, NN, OFB, robotpub, theory},

Owner = {wdixon},

Timestamp = {2017.08.02},

Url1 = {http://ncr.mae.ufl.edu/papers/JDSMC17_2.pdf}

}

@PhdThesis{Dinh2012,

author = {H. T. Dinh},

school = {University of Florida},

title = {Dynamic Neural Network-Based Robust Control Methods for Uncertain Nonlinear Systems},

year = {2012},

keywords = {RISE, NN, theory, learning},

owner = {wdixon},

timestamp = {2017.05.09},

url1 = {http://ncr.mae.ufl.edu/dissertations/huyen.pdf},

}

@InProceedings{Dinh.Bhasin.ea2012,

author = {Dinh, H. T. and S. Bhasin and D. Kim and W. E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Dynamic Neural Network-based Global Output Feedback Tracking Control for Uncertain Second-Order Nonlinear Systems},

year = {2012},

address = {Montr\'{e}al, Canada},

month = {Jun.},

pages = {6418-6423},

keywords = {Robotpub, NN, theory},

timestamp = {2017.07.06},

}

@InProceedings{Dinh.Fischer.ea2013a,

author = {H. T. Dinh and N. Fischer and R. Kamalapurkar and W. E. Dixon},

booktitle = {Proc. Am. Control Conf.},

title = {Output Feedback Control for Uncertain Nonlinear Systems with Slowly Varying Input Delay},

year = {2013},

address = {Washington, DC},

month = {Jun.},

pages = {1748-1753},

keywords = {NN, theory, delay},

owner = {wdixon},

timestamp = {2017.05.09},

}

@Article{Dinh.Kamalapurkar.ea2014,

author = {H. T. Dinh and R. Kamalapurkar and S. Bhasin and W. E. Dixon},

journal = {Neural Netw.},

title = {Dynamic Neural Network-based Robust Observers for Uncertain Nonlinear Systems},

year = {2014},

month = {Dec.},

pages = {44-52},

volume = {60},

keywords = {NN, theory},

owner = {wdixon},

timestamp = {2017.05.09},

url1 = {http://ncr.mae.ufl.edu/papers/nn14.pdf},

}

@InProceedings{Dinh2011,

author = {H. T. Dinh and R. Kamalapurkar and S. Bhasin and W. E. Dixon},

booktitle = {Proc. IEEE Conf. Decis. Control},

title = {Dynamic Neural Network-based Robust Observers for Second-order Uncertain Nonlinear Systems},

year = {2011},

address = {Orlando, FL},

pages = {7543-7548},

keywords = {NN, theory},

timestamp = {2017.05.09},

}

@InBook{Dixon2020a,

author = {Dixon, W. E.},

editor = {J. Baillieul and T. Samad},

chapter = {Intermittent Image-Based Estimation},

pages = {1-4},

publisher = {Springer},

title = {Encyclopedia of Systems and Control},

year = {2020},

doi = {10.1007/978-3-030-44184-5},

keywords = {robotpub, vision estimation},

}

@InBook{Dixon2009,

Title = {Encyclopedia of Complexity and Systems Science},

Author = {Dixon, W. E.},

Chapter = {Introduction to Complexity and Nonlinearity in Autonomous Robotics},

Pages = {1224-1226},

Publisher = {Springer},

Year = {2009},

Volume = {2},

ISBN = {9780387758886}

}

@Article{Dixon2007,

author = {Dixon, W. E.},

journal = {IEEE Trans. Autom. Control},

title = {Adaptive Regulation of Amplitude Limited Robot Manipulators with Uncertain Kinematics and Dynamics},

year = {2007},

pages = {488-493},

volume = {52},

keywords = {robotpub, theory},

url = {http://ncr.mae.ufl.edu/papers/tac07.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/tac07.pdf},

}

@InProceedings{Dixon2004b,

author = {Dixon, W. E.},

booktitle = {Proc. Am. Control Conf.},

title = {Adaptive Regulation of Amplitude Limited Robot Manipulators with Uncertain Kinematics and Dynamics},

year = {2004},

address = {Boston, Massachusetts},

month = {Jun.},

pages = {3839-3844},

keywords = {robotpub, theory},

}

@InProceedings{Dixon2003d,

author = {Dixon, W. E.},

booktitle = {Proc. IEEE/RSJ Int. Conf. Intell. Robot. Syst.},

title = {Teach by Zooming: A Camera Independent Alternative to Teach By Showing Visual Servo Control},

year = {2003},

address = {Las Vegas, Nevada},

month = {Oct.},

pages = {749-754},

keywords = {robotpub, vision control},

}

@Book{Dixon2003,

Title = {Nonlinear Control of Engineering Systems: A Lyapunov-Based Approach},

Author = {W. E. Dixon and A. Behal and D. M. Dawson and S. Nagarkatti},

Publisher = {Birkh{\"a}user},

Address = {Boston},

Year = {2003},

ISBN = {081764265X}

}

@Article{Dixon.Bellman2016,

Title = {Cycling Induced by Functional Electrical Stimulation: A Control Systems Perspective},

Author = {W. E. Dixon and M. Bellman},

Journal = {ASME Dyn. Syst. \& Control Mag.},

Year = {2016},

Month = {Sep.},

Number = {3},

Pages = {3-7},

Volume = {4},

Keywords = {FES, NMES, Cycle},

Owner = {wdixon},

Timestamp = {2016.10.03},

Url1 = {http://ncr.mae.ufl.edu/papers/DSCM16.pdf}

}

@Article{Dixon2003b,

author = {W. E. Dixon and J. Chen},

journal = {IEEE Trans. Autom. Control},

title = {Comments on 'A Composite Energy Function-Based Learning Control Approach for Nonlinear Systems with Time-Varying Parametric Uncertainties'},

year = {2003},

pages = {1671-1672},

volume = {48},

keywords = {robotpub, learning, repetitive learning},

url = {http://ncr.mae.ufl.edu/papers/tac03.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/tac03.pdf},

}

@InProceedings{Dixon2009a,

Title = {Motion Estimation for Improved Target Tracking with a Network of Cameras},

Author = {W. E. Dixon and C. Crane and R. Kress and F. Bzorgi},

Booktitle = {ANS Annual Meeting},

Year = {2009},

Address = {Atlanta, GA},

Keywords = {networkpub, vision estimation}

}

@Article{Dixon2002a,

Title = {A MATLAB-Based Control Systems Laboratory Experience for Undergraduate Students: Towards Standardization and Shared Resources},

Author = {W. E. Dixon and D. M. Dawson and B. T. Costic and M. S. de Queiroz},

Journal = {IEEE Trans. Educ.},

Year = {2002},

Pages = {218-226},

Volume = {45},

Keywords = {Matlab, networkpub},

Url = {http://ncr.mae.ufl.edu/papers/te02.pdf},

Url1 = {http://ncr.mae.ufl.edu/papers/te02.pdf}

}

@InProceedings{Dixon2001e,

author = {W. E. Dixon and D. M. Dawson and B. T. Costic and M. S. de Queiroz},

booktitle = {Proc. Am. Control Conf.},

title = {Towards the Standardization of a MATLAB-Based Control Systems Laboratory Experience for Undergraduate Students},

year = {2001},

address = {Arlington, Virginia},

month = {Jun.},

pages = {1161-1166},

keywords = {Matlab, networkpub},

}

@Article{Dixon2000b,

author = {W. E. Dixon and D. M. Dawson and E. Zergeroglu},

journal = {ASME J. Dyn. Syst. Meas. Control},

title = {Tracking and Regulation Control of a Mobile Robot System with Kinematic Disturbances: A Variable Structure-Like Approach},

year = {2000},

pages = {616-623},

volume = {122},

keywords = {Robotpub, WMR},

timestamp = {2017.05.09},

url = {http://ncr.mae.ufl.edu/papers/tasme00.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/tasme00.pdf},

}

@InProceedings{Dixon2000h,

author = {W. E. Dixon and D. M. Dawson and E. Zergeroglu},

booktitle = {Proc. IEEE Conf. Control Appl.},

title = {Robust Control of a Mobile Robot System with Kinematic Disturbances},

year = {2000},

address = {Anchorage, Alaska},

month = {Sep.},

pages = {437-442},

keywords = {robotpub, WMR},

}

@Article{Dixon2001a,

author = {W. E. Dixon and D. M. Dawson and E. Zergeroglu and A. Behal},

journal = {IEEE Trans. Syst. Man Cybern. Part B Cybern.},

title = {Adaptive Tracking Control of a Wheeled Mobile Robot via an Uncalibrated Camera System},

year = {2001},

pages = {341-352},

volume = {31},

keywords = {robotpub, WMR, vision control},

url = {http://ncr.mae.ufl.edu/papers/tcyber01.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/tcyber01.pdf},

}

@Book{Dixon2000,

author = {W. E. Dixon and D. M. Dawson and E. Zergeroglu and A. Behal},

publisher = {Springer-Verlag London Ltd},

title = {Nonlinear Control of Wheeled Mobile Robots},

year = {2000},

series = {Lecture Notes in Control and Information Sciences},

volume = {262},

isbn = {1852334142},

keywords = {Robotpub, WMR},

timestamp = {2017.05.09},

}

@InProceedings{Dixon2000i,

author = {W. E. Dixon and D. M. Dawson and E. Zergeroglu and A. Behal},

booktitle = {Proc. Am. Control Conf.},

title = {Adaptive Tracking Control of a Wheeled Mobile Robot via an Uncalibrated Camera System},

year = {2000},

address = {Chicago, Illinois},

month = {Jun.},

pages = {1493-1497},

keywords = {robotpub, WMR, vision control},

}

@Article{Dixon2000f,

author = {W. E. Dixon and D. M. Dawson and E. Zergeroglu and F. Zhang},

journal = {Int. J. Robust Nonlinear Control},

title = {Robust Tracking and Regulation Control for Mobile Robots},

year = {2000},

pages = {199-216},

volume = {10},

keywords = {robotpub, WMR},

url = {http://ncr.mae.ufl.edu/papers/ijrnc00.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/ijrnc00.pdf},

}

@InProceedings{Dixon1999b,

Title = {Robust Tracking Control of a Mobile Robot System},

Author = {W. E. Dixon and D. M. Dawson and E. Zergeroglu and F. Zhang},

Booktitle = {Proc. IEEE Conf. Control Appl.},

Year = {1999},

Address = {Kohala Coast, Hawaii},

Month = {Aug.},

Pages = {1015-1020},

Keywords = {Robotpub, WMR},

Timestamp = {2017.05.09}

}

@Article{Dixon2000g,

author = {W. E. Dixon and D. M. Dawson and F. Zhang and E. Zergeroglu},

journal = {IEEE Trans. Syst. Man Cybern. Part B Cybern.},

title = {Global Exponential Tracking Control of a Mobile Robot System via a PE Condition},

year = {2000},

pages = {129-142},

volume = {30},

keywords = {robotpub, WMR},

url = {http://ncr.mae.ufl.edu/papers/tcyber00.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/tcyber00.pdf},

}

@InProceedings{Dixon1999a,

Title = {Global Exponential Tracking Control of a Mobile Robot System via a PE Condition},

Author = {W. E. Dixon and D. M. Dawson and F. Zhang and E. Zergeroglu},

Booktitle = {Proc. IEEE Conf. Decis. Control},

Year = {1999},

Address = {Phoenix, Arizona},

Month = {Dec.},

Pages = {4822-4827},

Keywords = {Robotpub},

Timestamp = {2017.05.09}

}

@InProceedings{Dixon1999e,

Title = {Global Exponential Setpoint Control of Mobile Robots},

Author = {W. E. Dixon and D. M. Dawson and F. Zhang and E. Zergeroglu},

Booktitle = {Proc. IEEE/ASME Int. Conf. Adv. Intell. Mechatron.},

Year = {1999},

Address = {Atlanta, Georgia},

Month = {Sep.},

Pages = {683-688},

Keywords = {Robotpub, WMR},

Timestamp = {2017.05.09}

}

@InProceedings{Dixon2003e,

Title = {Adaptive Range Identification for Exponential Visual Servo Control},

Author = {W. E. Dixon and Y. Fang and D. M. Dawson and J. Chen},

Booktitle = {Proc. IEEE Int. Symp. Intell. Control},

Year = {2003},

Address = {Houston, Texas},

Month = {Oct.},

Pages = {46-51},

Keywords = {vision control}

}

@Article{Dixon2003a,

Title = {Range Identification for Perspective Vision Systems},

Author = {W. E. Dixon and Y. Fang and D. M. Dawson and T. J. Flynn},

Journal = {IEEE Trans. Autom. Control},

Year = {2003},

Pages = {2232-2238},

Volume = {48},

Keywords = {vision estimation},

Url1 = {http://ncr.mae.ufl.edu/papers/tac03_2.pdf}

}

@InProceedings{Dixon2003f,

Title = {Range Identification for Perspective Vision Systems},

Author = {W. E. Dixon and Y. Fang and D. M. Dawson and T. J. Flynn},

Booktitle = {Proc. Am. Control Conf.},

Year = {2003},

Address = {Denver, Colorado},

Month = {Jun.},

Pages = {3448-3453},

Keywords = {vision estimation}

}

@InProceedings{Dixon2005,

author = {W. E. Dixon and T. Galluzzo and G. Hu and C. Crane},

booktitle = {Fifth Int. Workshop on Robot Motion and Control},

title = {Adaptive Velocity Field Control of a Wheeled Mobile Robot},

year = {2005},

address = {Dymaczewo, Poland},

month = {Jun.},

pages = {145-150},

keywords = {robotpub, WMR, Guoqiang},

timestamp = {2016.06.02},

}

@Article{Dixon2000c,

author = {W. E. Dixon and Z. P. Jiang and D. M. Dawson},

journal = {Automatica},

title = {Global Exponential Setpoint Control of Wheeled Mobile Robots: A Lyapunov Approach},

year = {2000},

pages = {1741-1746},

volume = {36},

keywords = {robotpub, WMR},

url = {http://ncr.mae.ufl.edu/papers/auto00.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/auto00.pdf},

}

@InProceedings{Dixon2002b,

author = {W. E. Dixon and L. J. Love},

booktitle = {Proc. ANS Int. Spectr. Conf.},

title = {Lyapunov-based Visual Servo Control for Robotic Deactivation and Decommissioning},

year = {2002},

address = {Reno, Nevada},

month = {Aug.},

keywords = {robotpub, vision control},

}

@InProceedings{Dixon2001b,

Title = {A Simulink-Based Robotic Toolkit for Simulation and Control of the PUMA 560 Robot Manipulator},

Author = {W. E. Dixon and D. Moses and I. D. Walker and D. M. Dawson},

Booktitle = {Proc. IEEE/RSJ Int. Conf. Intell. Robot. Syst.},

Year = {2001},

Address = {Maui, HI},

Month = {Nov.},

Pages = {2202-2207},

Keywords = {robotpub, Simulink}

}

@InProceedings{Dixon2002c,

author = {W. E. Dixon and M. S. de Queiroz and D. M. Dawson},

booktitle = {Proc. IEEE Int. Conf. Robot. Autom.},

title = {Adaptive Tracking and Regulation Control of a Wheeled Mobile Robot with Controller/Update Law Modularity},

year = {2002},

address = {Washington, DC},

month = {May},

pages = {2620-2625},

keywords = {robotpub, theory, WMR},

}

@Article{Dixon2004a,

author = {W. E. Dixon and M. S. de Queiroz and D. M. Dawson and T. J. Flynn},

journal = {IEEE Trans. Control Syst. Technol.},

title = {Adaptive Tracking and Regulation Control of a Wheeled Mobile Robot with Controller/Update Law Modularity},

year = {2004},

pages = {138-147},

volume = {12},

keywords = {robotpub, theory, WMR},

url = {http://ncr.mae.ufl.edu/papers/cst04.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/cst04.pdf},

}

@Article{Dixon1999,

Title = {Tracking Control of Robot Manipulators with Bounded Torque Inputs},

Author = {W. E. Dixon and M. S. de Queiroz and D. M. Dawson and F. Zhang},

Journal = {Robotica},

Year = {1999},

Pages = {121-129},

Volume = {17},

Keywords = {Robotpub},

Timestamp = {2017.05.09},

Url1 = {http://ncr.mae.ufl.edu/papers/robo99_2.pdf}

}

@InProceedings{Dixon1998a,

Title = {Tracking Control of Robot Manipulators with Bounded Torque Inputs},

Author = {W. E. Dixon and M. S. de Queiroz and D. M. Dawson and F. Zhang},

Booktitle = {Proc. IASTED Int. Conf. Robot. Manuf.},

Year = {1998},

Address = {Banff, Canada},

Month = {Jul.},

Pages = {112-115},

Keywords = {Robotpub},

Timestamp = {2017.05.09}

}

@InProceedings{Dixon2001d,

author = {W. E. Dixon and I. D. Walker and D. M. Dawson},

booktitle = {Proc. IEEE/ASME Int. Conf. Adv. Intell. Mechatron.},

title = {Fault Detection for Wheeled Mobile Robots with Parametric Uncertainty},

year = {2001},

address = {Como, Italy},

month = {Jul.},

pages = {1245-1250},

keywords = {robotpub, WMR, fault},

}

@Article{Dixon2000a,

author = {W. E. Dixon and I. D. Walker and D. M. Dawson and J. P. Hartranft},

journal = {IEEE Trans. Robot. Autom.},

title = {Fault Detection for Robot Manipulators with Parametric Uncertainty: A Prediction Error Based Approach},

year = {2000},

pages = {689-699},

volume = {16},

keywords = {Robotpub, fault},

timestamp = {2017.05.09},

url = {http://ncr.mae.ufl.edu/papers/tra00.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/tra00.pdf},

}

@InProceedings{Dixon2000j,

author = {W. E. Dixon and I. D. Walker and D. M. Dawson and J. P. Hartranft},

booktitle = {Proc. IEEE Int. Conf. Robot. Autom.},

title = {Fault Detection for Robotic Manipulators with Parametric Uncertainty: A Prediction Error Based Approach},

year = {2000},

address = {San Francisco, California},

month = {Apr.},

pages = {3628-3634},

keywords = {robotpub, fault},

}

@Article{Dixon2001,

Title = {Comments on Adaptive Variable Structure Setpoint Control of Underactuated Robots},

Author = {W. E. Dixon and E. Zergeroglu},

Journal = {IEEE Trans. Autom. Control},

Year = {2001},

Pages = {812},

Volume = {46},

Keywords = {Robotpub},

Url = {http://ncr.mae.ufl.edu/papers/tac01.pdf},

Url1 = {http://ncr.mae.ufl.edu/papers/tac01.pdf}

}

@Article{Dixon2000d,

author = {W. E. Dixon and E. Zergeroglu},

journal = {IEEE Trans. Autom. Control},

title = {Comments on Sliding-Mode Motion/Force Control of Constrained Robots},

year = {2000},

pages = {1576-1577},

volume = {45},

keywords = {robotpub, theory},

url = {http://ncr.mae.ufl.edu/papers/tac00.pdf},

url1 = {http://ncr.mae.ufl.edu/papers/tac00.pdf},

}

@InProceedings{Dixon2001c,

author = {W. E. Dixon and E. Zergeroglu and D. Dawson and B. T. Costic},

booktitle = {Proc. IEEE Conf. Control Appl.},

title = {Repetitive Learning Control: A Lyapunov-Based Approach},

year = {2001},

address = {Mexico City, Mexico},

month = {Sep.},

pages = {530-535},

keywords = {robotpub, theory, learning, repetitive learning},

}

@Article{Dixon2004,

Title = {Global Robust Output Feedback Tracking Control of Robot Manipulators},

Author = {W. E. Dixon and E. Zergeroglu and D. M. Dawson},

Journal = {Robotica},

Year = {2004},

Pages = {351-357},

Volume = {22},

Keywords = {Robotpub},

Url = {http://ncr.mae.ufl.edu/papers/robo04.pdf},

Url1 = {http://ncr.mae.ufl.edu/papers/robo04.pdf}

}

@Article{Dixon2002,

author = {W. E. Dixon and E. Zergeroglu and D. M. Dawson and B. T. Costic},

journal = {IEEE Trans. Syst. Man Cybern. Part B Cybern.},

title = {Repetitive Learning Control: A Lyapunov-Based Approach},

year = {2002},

pages = {538-545},

volume = {32},

keywords = {robotpub, theory, learning, repetitive learning},

url1 = {http://ncr.mae.ufl.edu/papers/tcyber02.pdf},

}

@Article{Dixon2000e,

Title = {Global Adaptive Partial State Feedback Tracking Control of Rigid-Link Flexible-Joint Robots},

Author = {W. E. Dixon and E. Zergeroglu and D. M. Dawson and M. W. Hannan},

Journal = {Robotica},

Year = {2000},

Pages = {325-326},

Volume = {18},

Keywords = {Robotpub},

Url = {http://ncr.mae.ufl.edu/papers/robo00_2.pdf},

Url1 = {http://ncr.mae.ufl.edu/papers/robo00_2.pdf}

}

@InProceedings{Dixon1999c,

Title = {Global Adaptive Partial State Feedback Tracking Control of Rigid-Link Flexible-Joint Robots},

Author = {W. E. Dixon and E. Zergeroglu and D. M. Dawson and M. W. Hannan},

Booktitle = {Proc. IEEE/ASME Int. Conf. Adv. Intell. Mechatron.},

Year = {1999},

Address = {Atlanta, Georgia},

Month = {Sep.},

Pages = {281-286},

Keywords = {Robotpub},

Timestamp = {2017.05.09}

}

@InProceedings{Dixon1999d,

Title = {Experimental Video of a Controller for Flexible Joint Robots},

Author = {W. E. Dixon and E. Zergeroglu and D. M. Dawson and M. W. Hannan},

Booktitle = {Proc. IEEE/ASME Int. Conf. Adv. Intell. Mechatron.},

Year = {1999},

Address = {Atlanta, Georgia},

Month = {Sep.},

Keywords = {Robotpub},

Timestamp = {2017.05.09}

}

@InProceedings{Dixon1998b,

Title = {Global Output Feedback Tracking Control for Rigid-Link Flexible-Joint Robots},

Author = {W. E. Dixon and E. Zergeroglu and D. M. Dawson and M. S. de Queiroz},

Booktitle = {Proc. IEEE Int. Conf. Robot. Autom.},

Year = {1998},

Address = {Leuven, Belgium},

Month = {May},

Pages = {498-504},

Keywords = {Robotpub},

Timestamp = {2017.05.09}

}

@InProceedings{Dixon2002d,

author = {W. E. Dixon and E. Zergeroglu and Y. Fang and D. M. Dawson},

booktitle = {Proc. IEEE Int. Conf. Robot. Autom.},

title = {Object Tracking by a Robot Manipulator: A Robust Cooperative Visual Servoing Approach},

year = {2002},

address = {Washington, DC},

month = {May},

pages = {211-216},

keywords = {robotpub, vision control},

}

@InProceedings{Dixon1998,

Title = {Global Robust Output Feedback Tracking Control of Robot Manipulators},

Author = {W. E. Dixon and F. Zhang and D. M. Dawson and A. Behal},

Booktitle = {Proc. IEEE Conf. Control Appl.},

Year = {1998},

Address = {Trieste, Italy},

Month = {Sep.},

Pages = {897-901},

Keywords = {Robotpub},

Timestamp = {2017.05.09}

}

@PhdThesis{Downey2015,

Title = {Asynchronous Neuromuscular Electrical Stimulation},

Author = {R. Downey},

School = {University of Florida},

Year = {2015},

Keywords = {NMES, RISE, switched},

Owner = {wdixon},

Timestamp = {2012.08.27},

Url1 = {http://ncr.mae.ufl.edu/dissertations/sank.pdf}

}

@InBook{Downey.Kamalapurkar.ea2015,

author = {R. Downey and R. Kamalapurkar and N. Fischer and W. E. Dixon},

chapter = {Compensating for Fatigue-Induced Time-Varying Delayed Muscle Response in Neuromuscular Electrical Stimulation Control},

editor = {I. Karafyllis and M. Malisoff and F. Mazenc and P. Pepe},

pages = {143-161},